Crawler Traps: How to Identify and Avoid Them

Crawler traps—also known as "spider traps"—can seriously hurt your SEO performance by wasting your crawl budget and generating duplicate content.

The term "crawler traps" refers to a structural issue within a website that results in crawlers finding a virtually infinite number of irrelevant URLs.

To avoid generating crawler traps, make sure that the technical foundation of your website is up to par, and that you are using proper tools that can quickly detect them.

Crawler traps hurt a crawler's ability to explore your website, which harms the crawling and indexing process and ultimately your rankings.

What are crawler traps?

In SEO, "crawler traps" are a structural issue within a website that causes crawlers to find a virtually infinite number of irrelevant URLs. In theory, crawlers could get stuck in one part of a website and never finish crawling these irrelevant URLs. That's why we call it a crawler "trap."

Crawler traps are sometimes also referred to as "spider traps."

Why should you worry about crawler traps?

Crawler traps hurt crawl budget and cause duplicate content.

Crawler traps cause crawl budget issues

Crawl budget is the number of pages that a search engine is willing to visit when crawling your website. It's basically the attention that search engines will give your website. Keep that in mind, and now think about crawler traps and how they lead only to pages with no relevance for SEO. That's wasted crawl budget. When crawlers are busy crawling these irrelevant pages, they're not spending their attention on your important pages.

"But aren't search engines smart enough to detect crawler traps?" you ask.

Search engines can detect crawler traps, but there's absolutely no guarantee that they will. And besides, in order for them to realize they're in a crawler trap, they need to go down into that crawler trap first, and by then it's already too late. The result? Wasted crawl budget.

If search engines are able to detect the crawler traps, they'll crawl them less over time and the traps become less of an issue. If you don't want to take any chances, we recommend tackling crawler traps head on.

When talking about crawler traps, we talk a lot about crawl resources and how detrimental traps can be to them, but traps can also act as sink nodes in the internal distribution of PageRank. When we look at a website as a tree diagram, or a node-and-edge graph, a sink node is a node (or page) with few or no outgoing links, and such nodes can leak PageRank. Obviously we don't sculpt PageRank on websites like we used to 10 years ago, but it's still important to address the issue and make sure we're not missing out on an opportunity to preserve PageRank and better internally connect to our "money pages."

Crawler traps cause duplicate content issues

It's worth noting that crawler traps aren't just a threat to your crawl budget; they're also a common reason why websites suffer from duplicate content issues. Why? Because some crawler traps make lots of low-quality pages accessible and indexable for search engines.

Crawler traps are real and search engine crawlers hate them. They come in different forms, for example I've seen: redirect loops due to mistyped regex in .htaccess, infinite pagination, 1,000,000+ pages on a sitewide search on keyword "a" and a virtually infinite amount of attributes/filters added to a URL due to faulty faceted navigation. Monitor your site for crawler traps and fix them as they can hurt your SEO!

A great brand site that is continuously being optimized for SEO doesn't stand the slightest chance of competing in Google when its crawl budget isn't being managed, that is, if undesirable, redundant, outdated landing pages are crawled more frequently than optimized, conversion-driving landing pages. That is why two things are essential: 1) continuously saving AND preserving raw web server logs and 2) having an external third party conduct a technical SEO audit, including a server log analysis, at least once every twelve months. With critical volumes of relevant data and an objective partner that doesn't shy away from laying bare a site's shortcomings, crawler traps can be avoided and SEO efforts overall greatly enhanced.

How to identify crawler traps

While it's sometimes hard for crawlers to identify crawler traps, it's easy for a person who knows the website. You only need to know what URLs should be crawled and then evaluate whether URLs that shouldn't have been crawled were in fact crawled.

Be on the lookout for the following URL patterns:

Checkout- and account-related

- admin

- cart

- checkout

- favorite

- password

- register

- sendfriend

- wishlist

Script-related

- cgi-bin

- includes

- var

Ordering- and filtering-related

- filter

- limit

- order

- sort

Session-related

- sessionid

- session_id

- SID

- PHPSESSID

Other

- ajax

- cat

- catalog

- dir

- mode

- profile

- search

- id

- pageid

- page_id

- docid

- doc_id
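Checking a long URL list against these patterns by hand gets tedious, so a small script can do a first pass. The following is a minimal sketch, not a definitive implementation: the group labels, the `classify_url` name, and the choice to match whole path segments and query parameter names (rather than substrings, so that "cat" does not flag "category") are all assumptions made for illustration.

```python
from urllib.parse import urlsplit

# Patterns copied from the lists above; the group labels are only used for reporting.
TRAP_PATTERNS = {
    "checkout/account": ["admin", "cart", "checkout", "favorite", "password",
                         "register", "sendfriend", "wishlist"],
    "script": ["cgi-bin", "includes", "var"],
    "ordering/filtering": ["filter", "limit", "order", "sort"],
    "session": ["sessionid", "session_id", "sid", "phpsessid"],
    "other": ["ajax", "cat", "catalog", "dir", "mode", "profile", "search",
              "id", "pageid", "page_id", "docid", "doc_id"],
}

def classify_url(url):
    """Return the sorted pattern groups found in a URL's path segments or query keys."""
    parts = urlsplit(url.lower())
    # Collect path segments, ignoring empty strings from leading/trailing slashes.
    tokens = {segment for segment in parts.path.split("/") if segment}
    # Add query parameter names (the part before "=").
    for pair in parts.query.split("&"):
        if pair:
            tokens.add(pair.split("=", 1)[0])
    return sorted(group for group, patterns in TRAP_PATTERNS.items()
                  if tokens.intersection(patterns))
```

For example, `classify_url("http://www.example.com/shoes?sort=price&sessionid=abc")` reports the ordering/filtering and session groups, while a clean URL such as `http://www.example.com/shoes/men/` returns an empty list.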

There are four ways to go about this:

- Run a crawl

- Advanced search operators in Google

- Check URL parameters in Google Search Console

- Analyze log files

Run a crawl of your own

Crawl your website with ContentKing and go through your dataset looking for the URL patterns mentioned above, and also scroll through your whole list of URLs. Sorting based on relevance score (so that the least important URLs are listed at the top) is also a great way to quickly find URLs that shouldn't be crawled.

Typical things you'll find:

- URLs with query parameters (containing a ? and/or a &)

Examples: http://www.example.com/shoes?sex=men&color=black&size=44&sale=no and http://www.example.com/calendar/events?&page=1&mini=2015-09&mode=week&date=2021-12-04

- URLs with repetitive patterns

Example: http://www.example.com/shoes/men/cat/cat/cat/cat/cat/cat/cat/cat/cat/

- Pages with duplicate titles, meta descriptions, and headings

Looking for pages with duplicate titles, meta descriptions, and headings is a great way to find potential crawler traps.
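URLs with repetitive patterns can also be flagged automatically in a crawl export. The sketch below simply counts how often the same path segment occurs in one URL; the function names and the threshold of three repeats are assumptions chosen for illustration, and a real site may need a different cut-off.

```python
from collections import Counter
from urllib.parse import urlsplit

def max_segment_repeats(url):
    """Return the highest number of times any single path segment occurs in the URL."""
    segments = [segment for segment in urlsplit(url).path.split("/") if segment]
    if not segments:
        return 0
    return max(Counter(segments).values())

def looks_repetitive(url, threshold=3):
    """Flag URLs in which one path segment appears at least `threshold` times."""
    return max_segment_repeats(url) >= threshold
```

Running `looks_repetitive` over every crawled URL and reviewing the flagged ones is usually faster than scrolling through the full list.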

When hunting for crawler traps, don't forget to check with different user-agents. I've seen sites where certain URLs are redirected for Googlebot, but not for visitors, or where bots see one canonical tag while a browser renders something different. In some cases a bot will even see a canonical tag that points to a page, but when the bot hits that page they get redirected back to the original page, creating a canonical/redirect loop only bots can see. If you aren't checking your site with different user agents, you might not be seeing the whole picture.

Website visualizations, using a tool such as Gephi for instance, can help identify these traps. They'll usually appear as a large cluster or a long tail in a network diagram.

Advanced search operators in Google

Use advanced search operators in Google to manually find the URL patterns mentioned above.

Using the site: operator, you tell search engines to search within a certain domain only, while inurl: indicates you're only looking for pages with a certain URL pattern.

Example queries:

- site:example.com inurl:filter

- site:example.com inurl:wishlist

- site:example.com inurl:favorite

- site:example.com inurl:cart

- site:example.com inurl:search

- site:example.com inurl:sessionid

Please note that you can also combine this into one query. In this example we've combined all six of the URL patterns above for amazon.com.
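A combined query can be assembled mechanically from the pattern list. This is a rough sketch that assumes the inurl: terms are joined with Google's OR operator; the `combined_query` helper is hypothetical, and how Google groups the terms is worth verifying against live results.

```python
def combined_query(domain, patterns):
    """Build a single search query that looks for any of the URL patterns on one domain."""
    inurl_terms = " OR ".join(f"inurl:{pattern}" for pattern in patterns)
    return f"site:{domain} {inurl_terms}"

patterns = ["filter", "wishlist", "favorite", "cart", "search", "sessionid"]
print(combined_query("example.com", patterns))
```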

Check URL parameters in Google Search Console

When you head over to Crawl > URL Parameters in Google Search Console, you'll find all the URL parameters Google's found while crawling your site.

Analyze your log files

Another useful source for finding these URL patterns: going through your web server's log files. These files are a record of all requests made to your server, including both visitors and search engines / other bots. Search for the same URL patterns as we mentioned above.
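As a starting point for this kind of log analysis, the sketch below counts how often a bot requested URLs that match a few suspect patterns. It assumes the common "combined" access log format and identifies the bot purely by its user-agent string, which can be spoofed; the regular expressions, the sample lines, and the function name are illustrative assumptions, not a complete log parser.

```python
import re
from collections import Counter

# Pulls the request path, status code, and user agent out of a combined-format log line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

# A few of the URL patterns listed earlier; extend this to suit your own site.
SUSPECT = re.compile(
    r"[?&](?:sort|filter|sessionid|limit|order)=|/(?:search|cart|wishlist)/", re.I
)

def bot_hits_on_suspect_urls(lines, bot_token="Googlebot"):
    """Count requests from one bot whose path matches a suspect pattern."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match or bot_token not in match.group("agent"):
            continue
        path = match.group("path")
        if SUSPECT.search(path):
            hits[path] += 1
    return hits

sample_lines = [
    '66.249.66.1 - - [04/Dec/2021:10:15:32 +0000] "GET /shoes?sort=price HTTP/1.1" 200 5123 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [04/Dec/2021:10:15:40 +0000] "GET /shoes/ HTTP/1.1" 200 8000 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.9 - - [04/Dec/2021:10:16:01 +0000] "GET /search/?q=a HTTP/1.1" 200 900 "-" '
    '"Mozilla/5.0 (Windows NT 10.0)"',
]
print(bot_hits_on_suspect_urls(sample_lines).most_common(10))
```

Sorting the result by count shows which trap URLs absorb the most crawler attention.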

eCommerce websites in particular tend to suffer from crawler traps. Issues with pagination, facets, and mismanagement of sunset products are among the numerous things that can send a crawler through an infinite loop. Log files can reveal a lot, but so can regular crawling and auditing. If you're having difficulty getting new products crawled and indexed fast, it might be a sign your site is suffering from crawler traps.

Common crawler traps and how to avoid them

Common crawler traps we often see in the wild:

- URLs with query parameters: these often lead to infinite unique URLs.

- Infinite redirect loops: URLs that keep redirecting and never stop.

- Links to internal searches: links to internal search-result pages to serve content.

- Dynamically generated content: where the URL is used to insert dynamic content.

- Infinite calendar pages: where there's a calendar present that has links to previous and upcoming months.

- Faulty links: links that point to faulty URLs, generating even more faulty URLs.

Below we'll describe each crawler trap, and how to avoid it.

URLs with query parameters

In most cases, URLs with parameters shouldn't be accessible for search engines, because they can generate virtually infinite URLs. Product filters are a great example of that. When you have eight filter options for each of four filtering criteria, that gives you 4,096 (8^4) possible combinations!
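To see how quickly filter combinations multiply, here is a small worked calculation. It assumes a hypothetical shop with four filtering criteria that each offer eight options; the `count_filter_urls` helper is made up for this illustration.

```python
from math import prod

def count_filter_urls(options_per_criterion, allow_unset=False):
    """Count distinct filter combinations, assuming one URL per combination."""
    # When a criterion may be left out, it effectively has one extra "not set" option.
    extra = 1 if allow_unset else 0
    return prod(count + extra for count in options_per_criterion)

facets = [8, 8, 8, 8]  # hypothetical: four criteria, eight options each
print(count_filter_urls(facets))                    # 4096
print(count_filter_urls(facets, allow_unset=True))  # 6561
```

If the same parameters can also appear in any order in the URL, the number of distinct URLs grows further still.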

Why do parameters get included into URLs?

For example to store information, such as product filtering criteria, session IDs, or referral information.

Example URL with product filtering criteria: http://www.example.com/shoes?sex=men&color=black&size=44&sale=no

Example URL with session ID: http://www.example.com?session=03D2CDBEA6B3C4NGB831

Example URL with referral information: http://www.example.com?source=main-nav

Advice:

Avoid using query parameters in URLs as much as possible. But if you do need to use them, or deal with them in general, then make sure they aren't accessible to search engines, by excluding them using the robots.txt file or by setting up URL parameter handling in Google Search Console and Bing Webmaster Tools.

The exception is parameter URLs that have lots of links pointing to them. For search engines to consolidate those signals to the canonical version through canonical URLs, the parameter URLs need to be crawlable. In that case, don't disallow them in the robots.txt file.

How do you fix and avoid this crawler trap?

If search engines have already indexed pages on your site with parameter URLs, follow the steps below, in the right order:

1. Communicate to search engines that you want these pages not to be indexed by implementing the robots noindex directive.

2. Give search engines some time to recrawl these pages and pick up on your request. If you don't have the patience to wait for this, request that they hide these URLs, using Google Search Console and Bing Webmaster Tools.

3. Use the robots.txt file to instruct search engines not to access these URLs. If this isn't an option for some reason, use the URL parameter handling settings in Google Search Console and Bing Webmaster Tools to instruct Google and Bing not to crawl these pages.

4. Additionally, when these URLs are introduced via links: be sure to add the nofollow link attribute to these links. This will result in search engines not following those links.

Keep in mind that if you jump to step 3 right away, search engines will never be able to pick up on the robots noindex directive (because you tell them to keep out) and will keep the URLs in their indexes a lot longer.

However, if search engines haven't yet indexed any pages with parameter URLs, then just follow steps 3 and 4 out of the steps mentioned above.

Crawler traps can seriously damage a site, but it depends on the type of trap the crawler is in. Whilst infinite spaces such as calendars, which have no end, and dynamically generated parameters such as those on eCommerce sites can be very problematic types of crawler paths, the worst kind of crawler trap I've ever seen (and experienced) is that which pulls in 'logical (but incorrect) parameters'.

This can really tank a site over time. It's that serious.

Infinite redirect loops

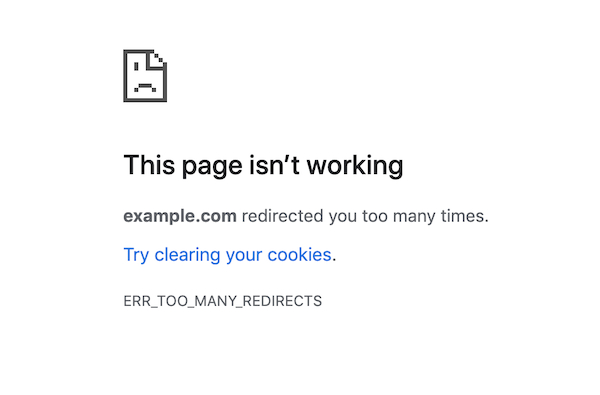

An infinite redirect loop is a series of redirects that never ends. When you encounter a redirect loop in Google Chrome, this is what you'll see:

Redirect loops cause visitors to be stuck on a site and will likely make them leave. Google will usually stop following redirects after three or four hops, and this hurts your crawl budget. Please note that they may resume following those redirects after some time—but you should still avoid this situation.

How are redirect loops created? Redirect loops are often the result of a poor redirect configuration. Let's say all requests to URLs without a trailing slash are 301-redirected to the version with the trailing slash, but because of a mistake, all requests to URLs with a trailing slash are also 301-redirected to the version without the trailing slash.

How do you fix and avoid this crawler trap?

You can fix redirect loops by fixing your redirect configuration. In the example above, removing the 301 redirects that send requests for URLs with a trailing slash to the version without the slash will fix the redirect loop, and it also gives you a consistent URL structure that always has a trailing slash at the end.
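One way to catch a loop like this before it goes live is to check the redirect rules offline. The sketch below assumes the rules have been exported as a simple source-to-target mapping; the `find_redirect_loop` function and the sample paths are hypothetical.

```python
def find_redirect_loop(redirects, start):
    """Follow a {source: target} redirect map from `start`.

    Returns the list of URLs that form a loop, or None if the chain ends.
    """
    seen = []
    url = start
    while url in redirects:
        if url in seen:
            # We are back at a URL already visited: everything from there on is the loop.
            return seen[seen.index(url):]
        seen.append(url)
        url = redirects[url]
    return None

# The misconfiguration described above: both variants redirect to each other.
broken = {"/shoes": "/shoes/", "/shoes/": "/shoes"}
fixed = {"/shoes": "/shoes/"}
print(find_redirect_loop(broken, "/shoes"))  # ['/shoes', '/shoes/']
print(find_redirect_loop(fixed, "/shoes"))   # None
```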

Links to your internal search

On some sites, links to internal search results are created to serve content, rather than having regular content pages. Links to internal search results are especially dangerous if the links are generated automatically. That could potentially create thousands of low-quality pages.

Let's take an example: you keep track of your website's most popular search queries, and you automatically link to them from your content because you think they are helpful for users. These search-result pages may contain few results, or none at all, which leads to low-quality content being accessible to search engines.

How do you fix and avoid this crawler trap?

Linking to internal search-result pages is only rarely better than having regular content pages, but if you really think it's useful to show these links to visitors, then at least make these internal search-result pages inaccessible to search engines using the robots.txt file.

Example:

Disallow: /search/ #block access to internal search result pages

Disallow: *?s=* #block access to internal search result pages

If using the robots.txt file is not an option for some reason, you can set up URL parameter handling in Google Search Console and Bing Webmaster Tools as well.
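Before relying on a robots.txt rule, it can help to test it against a few sample URLs. The sketch below uses Python's standard `urllib.robotparser` module and checks only the plain path-prefix rule for /search/; the wildcard rule is left out because this sketch does not assume the standard parser handles wildcards. The `User-agent: *` line and the `is_crawlable` helper are assumptions added to make the example complete.

```python
from urllib.robotparser import RobotFileParser

# Assumed rule group: the User-agent line is added here so the rule applies to all crawlers.
rules = """\
User-agent: *
Disallow: /search/
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())

def is_crawlable(url, user_agent="*"):
    """Return True if the parsed rules allow this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)

print(is_crawlable("http://www.example.com/search/?q=shoes"))  # False
print(is_crawlable("http://www.example.com/shoes/"))           # True
```

Search engines may interpret robots.txt slightly differently from this parser, so treat the result as a sanity check rather than a guarantee.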

Dynamically inserted content

One way to dynamically insert content in a page is to insert it through words from the URL. This is very tricky, because search engines can then find a lot of pages with low-quality content.

Let's use an example to illustrate this crawler trap.

www.example.com/pants/green/ has an H1 heading that says: "Buy green pants online at example shop". And it lists actual green pants. Sounds fine, right?

But what if www.example.com/pants/pink/ returns HTTP status 200 and contains an H1 heading that says "Buy pink pants online at example shop"… but shows no actual pink pants?

Yep, that would be bad.

This is only an issue if search engines can find these types of pages, and if pages that have no results also return HTTP status 200.

How do you fix and avoid this crawler trap?

There are a few things you can do to fix this issue:

- Make sure there are no internal links to these types of pages.

- Because you can't control external links, make sure that pages that shouldn't be accessible and that show no results return an HTTP status 404.
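The second point comes down to a simple rule in whatever code renders the listing page: no results, no 200. A minimal sketch, assuming a hypothetical in-memory catalog keyed by color (a real site would query its product database instead):

```python
from http import HTTPStatus

# Hypothetical catalog: color -> products that actually exist.
CATALOG = {
    "green": ["Green chinos", "Green joggers"],
    "pink": [],
}

def listing_status(color):
    """Return 200 only when the listing has products to show; otherwise 404."""
    products = CATALOG.get(color, [])
    return HTTPStatus.OK if products else HTTPStatus.NOT_FOUND

print(int(listing_status("green")))  # 200
print(int(listing_status("pink")))   # 404
```

With this rule in place, /pants/pink/ stops looking like a valid page to crawlers, even if someone links to it externally.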

Infinite calendar pages

Many websites contain calendars for scheduling appointments. That's fine—but only if the calendar is implemented correctly. The problem with these calendars is that they often place the dates to be displayed into the URL, and meanwhile they let you go far, far into the future. Sometimes even thousands of years.

You can see that this crawler trap is similar to URLs with query parameters, which we've already discussed. But it's so common that it makes sense to have a dedicated section for it.

A typical URL structure for a calendar would be:

- www.example.com/appointment?date=2018-07 for July 2018

- www.example.com/appointment?date=2018-08 for August 2018

- www.example.com/appointment?date=2018-09 for September 2018

- Etc.

This leads to an avalanche of pages that are uninteresting for search engines, so you want to keep them out.

How do you fix and avoid this crawler trap?

There are a few things you can do to keep calendars from becoming issues:

- Make sure to provide only a reasonable number of future months for appointments.

- Make the calendar URLs inaccessible to search engines through the robots.txt file.

- Add the nofollow link attribute to your "Next Month" and "Previous Month" links.
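Limiting the calendar to a reasonable range can be enforced on the server as well as in the links. The sketch below checks whether a requested month falls inside an allowed window; the `month_in_window` name, the 12-month default, and the decision to reject past months are all assumptions for illustration.

```python
from datetime import date

def month_in_window(month, today, months_ahead=12):
    """Return True if a 'YYYY-MM' month lies between the current month and `months_ahead` months later."""
    year, month_number = (int(part) for part in month.split("-"))
    # Number of months between the requested month and the current one.
    offset = (year - today.year) * 12 + (month_number - today.month)
    return 0 <= offset <= months_ahead

today = date(2018, 7, 15)
print(month_in_window("2018-09", today))  # True
print(month_in_window("3018-07", today))  # False
```

Requests for months outside the window could then return a 404 instead of an empty calendar page.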

Faulty links

One type of faulty link can create a crawler trap as well. This often happens when people use relative URLs and they omit the first slash.

Let's look at an example link:

<a href="shop/category-x">Category X</a>

The issue here is that the first slash before 'shop' is missing. This would have been correct:

<a href="/shop/category-x">Category X</a>

What happens if you use the wrong link? Browsers and search engines both resolve shop/category-x relative to the current URL. On a page whose URL ends with a slash, such as example.com/some-page/, that leads to example.com/some-page/shop/category-x/ rather than the intended example.com/shop/category-x/. On example.com/some-page/shop/category-x/, the same link becomes example.com/some-page/shop/category-x/shop/category-x/, and on that one, example.com/some-page/shop/category-x/shop/category-x/shop/category-x/, on to infinity.
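This behavior can be reproduced with Python's `urllib.parse.urljoin`, which resolves relative links the way browsers do. The sketch assumes the site serves every page at a URL with a trailing slash, as in the examples above; the `follow_relative_link` helper is hypothetical.

```python
from urllib.parse import urljoin

def follow_relative_link(page_url, href, hops=3):
    """Resolve `href` against each resulting page in turn and return the URLs visited."""
    visited = []
    current = page_url
    for _ in range(hops):
        current = urljoin(current, href)
        if not current.endswith("/"):
            current += "/"  # assumption: the site serves pages at trailing-slash URLs
        visited.append(current)
    return visited

# Missing leading slash: every hop produces a new, longer URL.
for url in follow_relative_link("http://example.com/some-page/", "shop/category-x"):
    print(url)

# Correct root-relative link: every hop lands on the same URL.
print(follow_relative_link("http://example.com/some-page/", "/shop/category-x"))
```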

When these incorrectly linked pages return an HTTP status code 200 ("OK") instead of 404 ("Page not found"), there's trouble. Search engines will then try to index these pages, leading to lots of low-quality pages getting indexed. (If the incorrectly linked pages just return an HTTP status code 404, then it's not a massive issue.)

This crawler trap is especially disastrous if it's included in global navigation elements, such as the main navigation, the sidebar, and the footer. Then all the pages on the site will contain this kind of incorrect link—including the pages that you incorrectly linked to.

How do you fix and avoid this crawler trap?

There are a few things you need to do to fix or avoid this crawler trap:

- Monitor your website for incorrect links. If you do, you'll notice the dramatic increase in new pages found, and you'll quickly find the issue.

- Make sure that pages that don't exist return an HTTP status code 404.

Best practices to avoid crawler traps overall

The best practices for avoiding crawler traps are two-fold:

- Make sure the technical foundation of your website is up to par, and

- Have tools in place that find crawler traps quickly.

Making sure the technical foundation of your website is on par

If you stick to the following around your technical foundation, you'll prevent crawler traps easily:

- Make sure that pages that don't exist return an HTTP status code 404.

- Disallow URLs that search engines shouldn't crawl.

- Add the nofollow attribute to links that search engines shouldn't crawl.

- Avoid the dynamic inserting of content.

Having tools in place that find crawler traps quickly

Having the right tools in place to find crawler traps quickly will save you a lot of headaches and potential embarrassment. What should these tools do? They should monitor your website for:

- A sudden increase in pages and redirects, and

- Duplicate content.

If crawler traps are found, you want this information to reach you fast. So you need alerts.
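The core of such an alert is a comparison between consecutive page counts. A minimal sketch, assuming you record the number of pages found per day; the function name and the 50% growth threshold are assumptions to tune for your own site:

```python
def page_count_alerts(daily_counts, max_growth=0.5):
    """Return the indexes of days on which the page count grew by more than `max_growth` versus the day before."""
    alerts = []
    for i in range(1, len(daily_counts)):
        previous, current = daily_counts[i - 1], daily_counts[i]
        if previous > 0 and (current - previous) / previous > max_growth:
            alerts.append(i)
    return alerts

counts = [1000, 1010, 1020, 4000, 4100]
print(page_count_alerts(counts))  # [3]
```

The same comparison works for redirect counts or the number of pages with duplicate titles.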

Frequently asked questions about crawler traps

What is a crawler trap?

Crawler traps, also known as "spider traps," are structural issues within a website that hurt a crawler's ability to explore your website. The issues result in crawlers finding a virtually infinite number of irrelevant URLs, leading to duplicate content and wasted crawl budget.

What are the most common crawler traps?

The most common crawler traps are URLs with query parameters, infinite redirect loops, links to internal searches, dynamically generated content, infinite calendar pages, and faulty links.

How do you find a crawler trap?

To identify a crawler trap on your website, it pays to have in-depth knowledge of its structure. You need to know what URLs should be crawled and then evaluate whether URLs that shouldn't have been crawled were in fact crawled. You should also implement proper tools that can quickly detect a sudden increase in pages and redirects on your website, as well as duplicate content. Tools that provide instant alerts enable you to react quickly and protect your SEO performance.

How do you avoid a crawler trap?

The best practices to avoid crawler traps include making sure that pages that do not exist return an HTTP status code 404. You should also disallow URLs that shouldn't be crawled by search engines, add the nofollow attribute to links that should not be followed by crawlers, and avoid using dynamically generated content.

![David Iwanow, Head of Search](https://cdn.sanity.io/images/tkl0o0xu/production/5f4996305d653e2847aefbe94b078a20c02ab41c-200x200.jpg?fit=min&w=100&h=100&dpr=1&q=95)

![Paul Shapiro, Head of Technical SEO & SEO Product Management](https://cdn.sanity.io/images/tkl0o0xu/production/fd312eea58cfe5f254dd24cc22873cef2f57ffbb-450x450.jpg?fit=min&w=100&h=100&dpr=1&q=95)