AI Crawlability: Ensuring Your Site is Visible in AI Search

Succeeding in the age of AI search means moving past outdated SEO workflows. AI crawlers operate differently from Googlebot: most don’t render JavaScript, and they visit sites on a different cadence. That makes it impossible for traditional scheduled crawls to keep up and offer clear insights into your AI crawlability. This gap creates critical blind spots, leaving your brand invisible to answer engines and unable to establish itself as a trusted source for the new way people find information.

Learn how Conductor's real-time monitoring platform provides the 24/7 intelligence needed to master AI crawlability. Discover how to instantly identify technical blockers, optimize your site's health, and build the foundation for a successful AEO strategy in the age of AI.

The rise of AI-driven search has introduced a new, non-negotiable requirement for online visibility: AI crawlability.

Before your brand can be mentioned, cited, or recommended by an answer engine, its crawlers first have to be able to find and understand your content.

If they can't, your brand is effectively invisible in AI search, no matter how strong your traditional SEO has been. This guide breaks down this new challenge, exploring how AI crawlers work, what blocks them, and how you can get definitive visibility into whether your site is being crawled and understood by AI.

How AI crawlers work differently from Googlebot

It’s important to understand how AI crawlers differ from the traditional crawlers used by Google or Bing, and why relying on the same SEO workflows and insights won’t give you the intelligence you need to maximize your presence in AI search.

AI crawlers don’t render JavaScript

One major difference between crawlers is in how they approach JavaScript. JavaScript (JS) is a programming language commonly used to create interactive features on websites. Think: navigation menus, real-time content updates, and dynamic forms. Brands will often rely on JS to enhance user experience or deliver personalized content.

Unlike Googlebot, which can process and render JavaScript after its initial visit to a site, most AI crawlers don’t execute any JavaScript. Generally, this is due to the high resource cost associated with rendering dynamic content at scale. As a result, AI crawlers only access the raw HTML served by the website and ignore any content loaded or modified by JavaScript.

That means if your site relies heavily on JavaScript for key content, you need to ensure that the same information is accessible in the initial HTML, or you risk AI crawlers being unable to interpret and process your content properly.

Imagine you’re a brand like The Home Depot and you use JavaScript to load key product information, customer reviews, or pricing tables. To a site visitor, these details appear seamless. But, since AI crawlers don’t process JavaScript, none of those dynamically served elements will be seen or indexed by answer engines. This significantly impacts how the content is represented in AI responses, as important information may be completely invisible to these systems.
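One way to spot-check this is to look only at the HTML your server returns, without executing any scripts, and confirm that key phrases are present. The sketch below is a minimal illustration using Python's standard library. The function name `missing_from_raw_html`, the sample markup, and the phrases are all hypothetical, and the check only approximates what a non-rendering crawler might read rather than reproducing any specific crawler's behavior.

```python
from html.parser import HTMLParser


class RawTextExtractor(HTMLParser):
    """Collects text present in the served HTML, skipping script and style bodies."""

    SKIPPED_TAGS = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only text that sits outside <script> and <style> elements.
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def missing_from_raw_html(html, required_phrases):
    """Return the phrases that do not appear in the page's script-free text."""
    parser = RawTextExtractor()
    parser.feed(html)
    parser.close()
    text = " ".join(parser.chunks).lower()
    return [phrase for phrase in required_phrases if phrase.lower() not in text]


# Hypothetical page: the product name is in the HTML, the price is injected by JS.
sample_html = """
<html><body>
  <h1>Cordless Drill</h1>
  <div id="price"></div>
  <script>document.getElementById("price").textContent = "$99.00";</script>
</body></html>
"""

missing = missing_from_raw_html(sample_html, ["Cordless Drill", "$99.00"])
print(missing)
```

In this made-up page the price only exists inside a script, so it is reported as missing. In practice you would fetch the live HTML for a page and pass in the product details, reviews, or pricing text you expect answer engines to see.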

Crawl speed & frequency differences

Based on research into our own content performance, we’re starting to see that AI engines are crawling our content more frequently than traditional search engine crawlers, which is a pattern we’re seeing with our customers as well. While this isn’t a hard and fast rule, the difference was stark in instances where answer engines crawled more than search engines, with AI sometimes visiting our pages over 100 times more than Google or Bing.

That means newly published or optimized content could get picked up by AI search as early as the day it’s published. But just like in SEO, if the content isn’t high-quality, unique, and technically sound, AI is unlikely to promote, mention, or cite it as a reliable source. Remember, a first impression is a lasting one.

Why making a good first impression matters more with AI crawlers than with traditional crawlers

With traditional search engines like Google, you have a safety net. If you need to fix or update a page, you can request that it be re-indexed through Google Search Console. That manual override doesn't exist for AI bots. You can't ask them to come back and re-evaluate a page.

This raises the stakes of that initial crawl significantly. If an answer engine visits your site and finds thin content or technical errors, it will likely take much longer to return—if it returns at all. You have to ensure your content is ready and technically sound from the moment you publish, because you may not get a second chance to make that critical first impression.

Check out our recent article to learn how to adapt your holiday SEO strategies—and which technical optimizations to prioritize—for improved AI search crawlability & visibility during peak retail holidays like Black Friday and Cyber Monday.

Are scheduled crawls enough to safeguard AI crawlability?

Before the AI search boom, many teams relied on weekly or even monthly scheduled site crawls to find technical issues. That was never a great solution for SEO monitoring, and it’s no longer tenable given the speed and unpredictability of AI search crawlers. An issue blocking AI crawlers from accessing your site could go undetected for days, and since AI crawlers may not visit your site again, that issue may actively damage your brand's authority with answer engines long before you see it in a report. That’s just another reason why real-time monitoring is so critical for success in AI.

Spotlight: Conductor case study

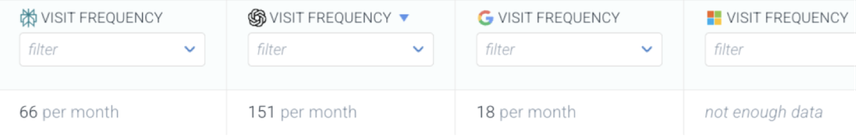

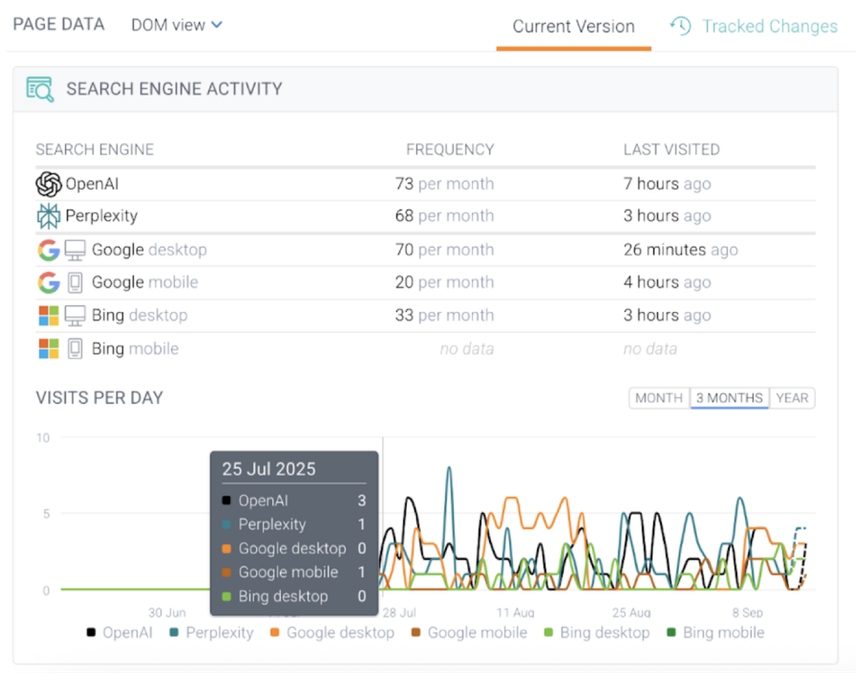

Take a piece of our content as an example. During our research, we leveraged Conductor Monitoring’s AI Crawler Activity feature and found that ChatGPT and Perplexity not only crawled the page more frequently than Google and Bing, but they also crawled the page sooner after publish than either of the traditional search engine crawlers.

This screenshot, pulled from Conductor Monitoring five days after the page was published, shows that ChatGPT visited the page roughly eight times more often than Google had, and Perplexity visited about three times more often. That’s stark and speaks to how quickly your content can be cited and how often updates and optimizations might be picked up by AI/LLM crawlers.

The line graph at the bottom of the above screenshot shows the frequency of crawls by each engine dating back to the publish date, July 24. Although Google mobile crawled the content first on July 24, within 24 hours, Perplexity had crawled it the same number of times, and ChatGPT had crawled it three times.

This breakdown shows the frequency of crawler visits across search and answer engines as well as when the most recent visit was.

As you can see, Google has largely caught up to the answer engines in terms of crawl frequency, with Google desktop visiting the page a bit more than Perplexity and a bit less than ChatGPT each month.

Bing and Google mobile, however, still show far fewer visits than either answer engine.

Key takeaways

- New content can get crawled and picked up by answer engines and LLMs as early as the day it’s published. That makes creating new content, optimizing what you have, and tracking that content’s performance to ensure crawlability critical for safeguarding and building your brand’s authority and visibility in AI.

- LLMs can crawl your content much more frequently than traditional search engines. There are likely a ton of reasons for this, and it’s not entirely clear what triggers an answer engine to crawl a site or piece of content. That’s where real-time monitoring makes such a big difference. It can show you which pages are being crawled, which aren’t, and how often, so that you can find opportunities to optimize.

- If AI isn’t crawling your site frequently, there are likely issues with the content under the hood. Audit your content’s quality and technical health, along with overall site health, to make sure that your content can be easily crawled and indexed by LLMs.
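To make the "which pages are being crawled, which aren't, and how often" idea concrete, the sketch below groups pages by how recently an AI crawler last visited them. It is an illustration only: the function name `segment_by_recency`, the seven-day threshold, and the sample URLs and timestamps are assumptions made up for this example, and it does not describe how any monitoring product computes its segments.

```python
from datetime import datetime, timedelta, timezone


def segment_by_recency(last_visits, now, stale_after=timedelta(days=7)):
    """Group URLs by time since the last AI crawler visit.

    last_visits maps a URL to the datetime of its most recent AI crawler
    visit, or to None when no visit has been recorded.
    """
    segments = {"recent": [], "stale": [], "never": []}
    for url, last_seen in last_visits.items():
        if last_seen is None:
            segments["never"].append(url)
        elif now - last_seen > stale_after:
            segments["stale"].append(url)
        else:
            segments["recent"].append(url)
    return segments


# Made-up data for illustration only.
now = datetime(2025, 1, 31, tzinfo=timezone.utc)
last_visits = {
    "/blog/new-post": now - timedelta(hours=3),
    "/products/old-page": now - timedelta(days=20),
    "/resources/gated-guide": None,
}

for name, urls in segment_by_recency(last_visits, now).items():
    print(name, urls)
```

Pages that land in the "stale" or "never" groups are reasonable first candidates for a content and technical audit, though the right threshold will depend on how often your own pages are normally crawled.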

Want to learn more about what it takes to get picked up by LLMs and improve your citation velocity? Check out our guide to getting cited in AI search.

What blocks AI crawlers and how do you fix it?

A variety of technical issues can block crawlers from properly accessing, indexing, and understanding your content. Specifically, these factors will impact an AI bot’s ability to crawl your content:

- Over-reliance on JavaScript: Unlike traditional search bots, the majority of AI crawlers do not render JavaScript and only see the raw HTML of a page. That means any critical content or navigation elements that depend on JS to load will remain unseen by AI crawlers, preventing answer engines from fully understanding and citing that content.

- Missing structured data/schema: Using Schema, AKA structured data, to explicitly label content elements like authors, key topics, and publish dates is one of the most important factors in maximizing AI visibility. It helps LLMs break down and understand your content. Without it, you make it much harder for answer engines to parse your pages efficiently.

- Technical issues: Are links on your site sending visitors to a 404 page? Is your site loading slowly? Technical issues like poor Core Web Vitals, crawl gaps, and broken links will impact how answer engines understand and crawl your site. If these issues remain for days or weeks, they will prevent AI from efficiently and properly crawling your content. That will then impact your site’s authority, expertise, and AI search visibility.

- Gated/restricted content: One of the major challenges facing content marketers right now is ensuring their gated content is discoverable. Traditionally, marketers would make gated assets non-indexable. Now, with AI search, brands are rethinking this to strike a balance between building authority and generating leads.
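For the structured data blocker in particular, a quick audit is to check whether a page's served HTML contains any JSON-LD markup and which schema types it declares. The sketch below is one possible approach using only Python's standard library. The names `JsonLdCollector` and `schema_types` and the sample pages are hypothetical, and the check is deliberately simple: it ignores Microdata, RDFa, and types nested inside an `@graph` array.

```python
import json
from html.parser import HTMLParser


class JsonLdCollector(HTMLParser):
    """Collects the raw contents of <script type="application/ld+json"> blocks."""

    def __init__(self):
        super().__init__()
        self._in_json_ld = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_json_ld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_json_ld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_json_ld:
            self._in_json_ld = False
            self.blocks.append("".join(self._buffer))


def schema_types(html):
    """Return the set of top-level @type values declared in a page's JSON-LD."""
    collector = JsonLdCollector()
    collector.feed(html)
    collector.close()
    types = set()
    for raw in collector.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            continue  # Malformed markup is treated as absent.
        for item in data if isinstance(data, list) else [data]:
            if not isinstance(item, dict):
                continue
            declared = item.get("@type")
            if isinstance(declared, str):
                types.add(declared)
            elif isinstance(declared, list):
                types.update(t for t in declared if isinstance(t, str))
    return types


# Hypothetical pages for illustration.
page_with_schema = (
    '<html><head><script type="application/ld+json">'
    '{"@context": "https://schema.org", "@type": "Article", "headline": "Hi"}'
    "</script></head><body><p>Hi</p></body></html>"
)
page_without_schema = "<html><body><p>No markup here</p></body></html>"

print(schema_types(page_with_schema))
print(schema_types(page_without_schema))
```

An empty result for a high-impact page suggests it may be worth adding markup there first.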

How do you know if your site is crawlable?

You can’t optimize something if you don’t know it’s broken. You need insights into how your content is performing and any blockers that may be standing in the way of getting your website and content crawled by AI/LLMs.

Invest in a real-time solution to track AI crawler activity

With traditional SEO, you can check server logs or Google Search Console to confirm that Googlebot has visited a page. For AI search, that level of certainty just isn’t there. The user-agents of AI crawlers are new, varied, and often missed by standard analytics and log file analyzers.

That’s why the only way to know if your site is truly crawlable by AI is to have a dedicated, always-on monitoring platform that specifically tracks AI bot activity. Without a solution that can identify crawlers from OpenAI, Perplexity, and other answer engines, you’re left guessing. Visibility into your site’s crawlability is the first step; once you can see AI crawler activity on your site, you can leverage the benefits of real-time data to optimize your strategy.
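As a rough first look, and not a substitute for always-on monitoring, you can scan raw web server access logs for known crawler user-agent tokens. The sketch below assumes logs in the common "combined" format and uses a small token list (`GPTBot`, `OAI-SearchBot`, `ChatGPT-User`, `PerplexityBot`, `Googlebot`, `bingbot`). Treat that list as an assumption to verify against each vendor's current documentation, since crawlers are added and renamed over time. The function name `count_crawler_hits` and the sample log lines are made up for illustration.

```python
import re
from collections import Counter

# Assumed token-to-engine mapping; verify against vendor documentation.
CRAWLER_TOKENS = {
    "GPTBot": "OpenAI",
    "OAI-SearchBot": "OpenAI",
    "ChatGPT-User": "OpenAI",
    "PerplexityBot": "Perplexity",
    "Googlebot": "Google",
    "bingbot": "Bing",
}

# Combined log format: request, status, size, referrer, then user agent.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def count_crawler_hits(log_lines):
    """Count requests per (engine, path) for lines whose user agent matches a token."""
    hits = Counter()
    for line in log_lines:
        match = LOG_LINE.search(line)
        if not match:
            continue
        agent = match.group("agent").lower()
        for token, engine in CRAWLER_TOKENS.items():
            if token.lower() in agent:
                hits[(engine, match.group("path"))] += 1
                break
    return hits


# Made-up log lines for illustration only.
sample_log = [
    '203.0.113.5 - - [01/Jan/2025:10:00:00 +0000] "GET /blog/post HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.2; +https://openai.com/gptbot)"',
    '203.0.113.6 - - [01/Jan/2025:11:00:00 +0000] "GET /blog/post HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.2; +https://openai.com/gptbot)"',
    '203.0.113.7 - - [01/Jan/2025:12:00:00 +0000] "GET /blog/post HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; PerplexityBot/1.0; +https://perplexity.ai/perplexitybot)"',
    '203.0.113.8 - - [01/Jan/2025:13:00:00 +0000] "GET /blog/post HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.9 - - [01/Jan/2025:14:00:00 +0000] "GET /blog/post HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64) Firefox/128.0"',
]

for (engine, path), count in sorted(count_crawler_hits(sample_log).items()):
    print(engine, path, count)
```

User-agent strings can be spoofed, so counts from a scan like this are indicative at best. Confirming that a request really came from a given crawler typically requires checking it against the IP ranges or verification methods the vendor publishes.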

What are the benefits of real-time monitoring for AI crawlability?

Since AEO/GEO and AI answer engine visibility are still in their infancy, the industry is experimenting with ways to optimize for AEO and become a go-to trusted source among answer engines.

Conductor Monitoring is the only platform built to help you navigate this shift with 24/7 intelligence and a suite of features that offer insights into if, when, and where AI bots are crawling your content.

With Conductor Monitoring, you can see:

- AI crawler activity: Tracking crawler visits shows you whether LLMs are coming back to your site, or if they visited it once and haven’t returned. This is what we illustrated with the conductor.com case study, where we showed how quickly AI was crawling our Profound comparison landing page.

- Crawl frequency segments: This feature clues you into which of your pages could benefit from optimizations. If an LLM hasn’t visited a page in hours or even days, it could mean there are technical or content-related issues within the page, making it very unlikely to be cited in AI search.

- Schema tracking: You can create a custom segment in Conductor to be alerted anytime a page is published that doesn’t have relevant schema markup. This gives you insight into whether your key pages have schema or whether you should add it to make it easier for answer engine bots to crawl and understand your content.

- Performance monitoring (Core Web Vitals): Customers with a Conductor Lighthouse Web Vitals integration can view their UX performance score. If this number is low, your UX could use improvement, which will make it less likely for answer engines to crawl your content.

One of our customers, a market-leading industrial technology company, has a massive site with multiple subdomains that they were having some difficulty overseeing. Some portions of the site worked really well, while others had room for improvement. This led to an overall inconsistent site performance and UX. With Conductor Monitoring, the team was able to monitor each of its subdomains, identify performance issues, and resolve them before their AI search visibility was impacted.

- Real-time alerts: Real-time alerting notifies you of any issues that arise on any pages on your site, the moment they’re detected. From there, these issues are prioritized based on impact so you can take action on what matters most and keep your technical health strong.

[Conductor Monitoring] is really helpful for us to catch bugs a lot earlier because currently, my scheduled crawls only happen once a week. There are instances where I don't find problems until five to six days later because they happen directly after my weekly crawl on Monday mornings. This real-time solution helps train other people to catch things much faster than what we've done previously.

The real-time difference: Conductor Monitoring customer case study

One of our customers is a global leader in automation, helping to transform industrial manufacturing. As a global company, they have over 1 million distinct webpages and operate in more than 30 different locales.

It was a huge undertaking to crawl and monitor all of those pages on their own, especially considering the different languages and nuances of each locale. As a result, it would take the team days just to crawl their English US locale pages, which led to large blind spots in their audits and resulted in issues going unnoticed for extended periods of time. By the time they found the issues, their performance and visibility had already been affected in AI search and traditional search engines.

The team decided to leverage Conductor Monitoring, which crawled and monitored their content 24/7 across 1M+ pages, along with complex business and product segments. Conductor Monitoring alerted the team to any issues as they appeared, even prioritizing the issues to triage based on business impact. This made it seamless for the team to identify issues and take action to resolve them. Altogether, Conductor Monitoring helped the team reduce technical issues by 50% and improve their discoverability for answer engines.

Quick wins to boost AI crawlability

Here are a few light-lift initiatives you can employ to improve the chances of your content being crawled and understood by AI crawlers, and, in turn, increase citations and mentions in AI search.

- Serve critical content in HTML to ensure it's visible to crawlers that don't render JavaScript.

- Add schema markup, like article Schema, author Schema, and product Schema, to your high-impact pages to make it easier for answer engine bots to crawl and understand them.

- Ensure authorship and freshness by including author information, leveraging your own internal thought leaders and subject matter experts, and keeping content updated. An author signals to LLMs who created the content, helping establish expertise and authority.

- Monitor Core Web Vitals, as your performance score speaks directly to user experience. If your UX isn’t optimized, answer engines are less likely to mention or cite your content.

- Run ongoing crawlability checks with a real-time monitoring platform to catch issues before they impact your visibility.
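As an illustration of the schema and authorship items above, the sketch below builds a JSON-LD `Article` snippet that names an author and carries publish and update dates, ready to be placed in a page's initial HTML. The properties used (`headline`, `author`, `datePublished`, `dateModified`) are standard schema.org vocabulary. The function name `article_json_ld` and every sample value are hypothetical placeholders, and a real page would usually include more properties than this minimal version.

```python
import json
from datetime import date


def article_json_ld(headline, author_name, published, modified):
    """Build a minimal schema.org Article snippet as a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
    }
    # Escape "</" so a stray "</script>" in a field cannot end the tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Placeholder values for illustration only.
snippet = article_json_ld(
    headline="Example headline",
    author_name="Jane Doe",
    published=date(2025, 1, 15),
    modified=date(2025, 2, 1),
)
print(snippet)
```

Because the tag is rendered server-side as part of the HTML, the markup stays visible to crawlers that don't execute JavaScript, and updating `dateModified` whenever the content changes keeps the freshness signal accurate.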

All of this comes down to making sure you’re keeping an eye on your site from a technical and UX perspective. AI is changing a lot about how people search and interact with brands online, but it’s not changing the fact that answer engines and search engines want to drive users to expert and authoritative websites that are technically sound.

Ensuring AI crawlability in review

The search landscape has fundamentally changed. Gone are the days when you could rely on scheduled crawls and traditional rank tracking to understand your online performance. As we've seen, answer engines move fast, and your brand's visibility can change in an instant. Staying ahead of the curve requires a new level of agility and insight that yesterday's tools can't provide.

That's where a proactive AEO strategy, powered by real-time intelligence, makes all the difference. By keeping a constant pulse on AI crawler activity, performance scores, schema implementation, and author signals, you can stop guessing and start making data-driven decisions that protect and grow your presence in AI search.

Success in this new era isn't just about fixing what's broken; it's about building a resilient digital presence that answer engines trust and promote. By leveraging the real-time monitoring features we've covered, you can get a single source of truth for your website’s technical health and AI crawlability, turning reactive fire drills into a proactive strategy for sustainable growth.