Everything You Need to Know about Prompt Engineering

Generative AI has been one of the hottest topics of the year so far, and a byproduct of that is everyone talking about how to get the most out of it. When using AI tools, sometimes the results you get from a simple request are not what you expected. It’s easy to blame the tool in that scenario, but what about the prompt you started with? Could that be improved?

In fact, many people are realizing that optimizing AI prompts is one of the most effective ways to improve the output AI tools provide. Essentially, better prompts = better results from AI. And so the concept of prompt engineering was born.

As SERPs and social media feeds continue to be saturated with content, hot takes, and think pieces about generative AI, you’ve probably heard the term prompt engineering a few times. When it comes to getting the most out of generative AI, it’s a very important concept. But before you sign up for a prompt engineering crash course or race to hire a prompt engineer, let’s dive into what prompt engineering is and how it can help you take full advantage of AI content generators.

What is prompt engineering?

Prompt engineering is the process of creating, testing, and refining prompts to effectively use AI content generators and large language models (LLMs).

As impressive as generative AI tools like ChatGPT, Google Bard, Bing, and Claude.ai are at pumping out content quickly, a drawback is that they can provide incorrect, dated, or irrelevant information.

In essence, prompt engineering is the process of finding the most effective combination of words to get the result you want from a generative AI tool. Really, we each try to find the right combination of words to get our point across every day. For example, you could ask someone: “What’s the best desk chair?” but without any context as to what you prefer in a desk chair or how much you're willing to pay, the person will probably give you a vague and subjective answer. In this instance, just like with ChatGPT and other tools, it helps to be more specific to get the most helpful response.

Why is prompt engineering important?

Prompt engineering is important because it helps you use generative AI more efficiently and ultimately get more out of it. Yes, generative AI has made a lot of manual tasks much faster, but if it takes heavy revisions or full-on rewrites to get your desired result from AI, how much time have you really saved?

Prompt engineering also has the potential to make your AI content generation processes more scalable, as well as increase the consistency of the quality and tone of the results over time. If you input a prompt into ChatGPT and get exactly the result you were looking for, you can save the prompt in a dedicated prompt library so you and your team members can reuse it in the future.

How do AI content generators work?

Despite what you’ve been told by doomsday prophets, AI content generators and LLMs cannot think. Generative AI tools are powered by LLMs, which are statistical models trained to predict text, not sentient robots that are thinking and speaking for themselves.

Essentially, LLMs are trained on enormous amounts of text from across the internet, and from there, they predict which word (more precisely, which token, a word or piece of a word) is most likely to follow the text so far. For instance, if you ask ChatGPT to tell you a story, there’s a good chance it will start with the phrase “Once upon a time…” because, in the text it learned from, stories very often begin with that phrase.
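To make the idea of next-word prediction concrete, here is a deliberately tiny Python sketch. It counts which word follows which in a few made-up story openings and then predicts the most frequent follower. This is a toy under heavy simplifying assumptions, not how ChatGPT works internally: real LLMs use neural networks over tokens and look at far more than the previous word. The shared idea is predicting a likely continuation from patterns in the training text.

```python
from collections import Counter, defaultdict

# A tiny, made-up "training corpus" (real LLMs learn from vastly more text).
corpus = [
    "once upon a time there was a dragon",
    "once upon a time there lived a king",
    "once upon a midnight dreary",
    "one day a fox found a grape",
]

def train_bigrams(sentences):
    """Count how often each word is followed by each other word."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent word seen after `word`, or None if `word` is unseen."""
    if word not in follows:
        return None
    return follows[word].most_common(1)[0][0]

def continue_text(follows, start, max_words=4):
    """Greedily extend `start` one predicted word at a time."""
    words = start.split()
    for _ in range(max_words):
        nxt = predict_next(follows, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)

model = train_bigrams(corpus)
print(continue_text(model, "once", max_words=3))  # prints: once upon a time
```

"time" wins after "a" simply because it appears there most often in this corpus, which mirrors the “Once upon a time…” example on a very small scale.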

Important things to consider when prompt engineering

Let’s walk through the elements that make up an AI prompt, as well as some techniques to help you craft the most effective prompt for each of your tasks.

Elements of a prompt

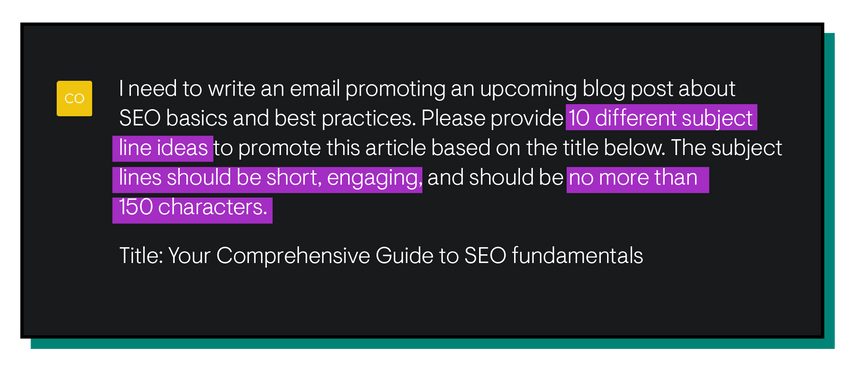

On the surface, a prompt may seem as simple as telling AI what you want it to do, whether that’s sorting data, creating content, generating an image, or helping with research. And while providing a command is a significant component, it’s only one of the four main aspects to consider when engineering prompts.

- Instructions

This refers to the task you need the LLM to complete. It provides a description of the job that needs to be done. The more specific you are, the better the results tend to be.

- Context

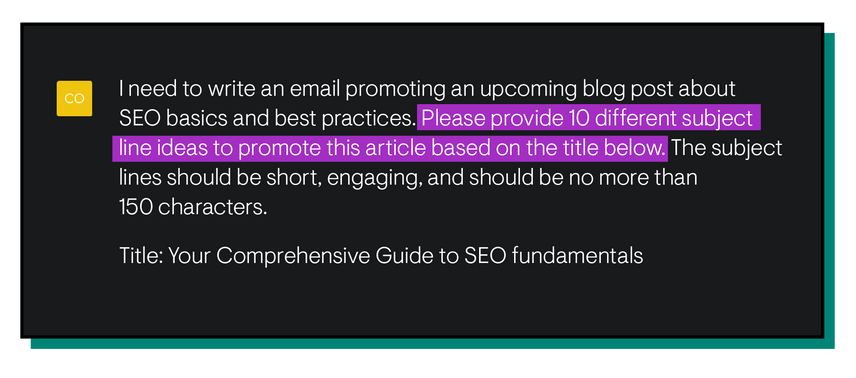

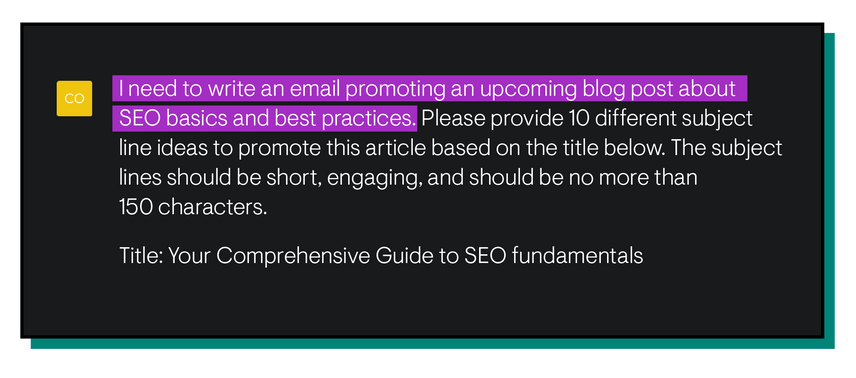

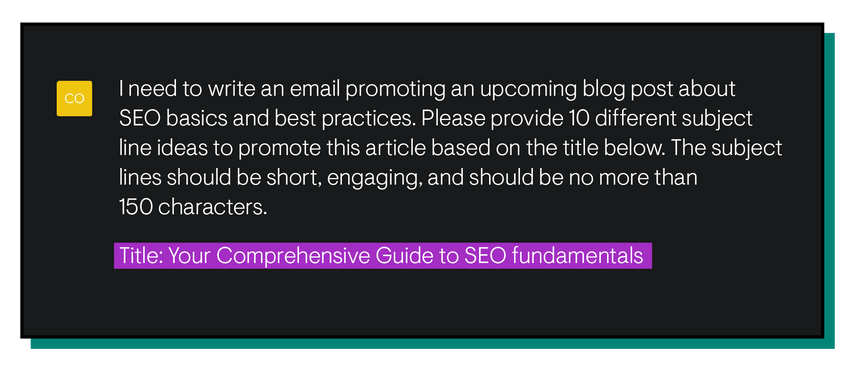

Context essentially refers to the why of your request. This could include information like the target audience for a piece of content, details about a larger campaign that something is a part of, or even the format you need the work in. For example, if you need help writing subject lines for promo emails, asking for 10 subject lines would be your instructions, and the context would be the purpose, tone, and audience of the emails, along with a key takeaway the email copy should provide. All of this helps the LLM narrow down what to draw from, making the result more specific to your ask.

- Input data

Related to the context of your ask, input data refers to the actual content that an LLM will read and base its work directly on. This could be a piece of existing content that you need summarized, a set of data points you need organized, or just examples that can help AI get closer to the desired result. Going back to our example above, if you need subject lines for a promo email, you could paste the email you’ll be sending into ChatGPT to add context. That promo email copy would be your input data.

- Output indicators

This is a fancy way of saying the format or structure you need the work completed in. This could include information like a specific word count, the number of bullet points, or how information is ordered. Back to the subject line example again, if I want the subject lines to be a particular length, I could ask for a maximum of 150 characters per subject line. That requirement would be an output indicator.
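If you build prompts programmatically or keep templates for your team, it can help to treat the four elements as separate fields. The Python sketch below shows one hypothetical way to do that using the subject-line example from this section. The `Prompt` class, its field names, and the section labels are our own choices, not part of any AI tool’s API, and the audience and tone values are placeholders.

```python
from dataclasses import dataclass

@dataclass
class Prompt:
    """Hypothetical container for the four prompt elements."""
    instructions: str
    context: str = ""
    input_data: str = ""
    output_indicators: str = ""

    def render(self) -> str:
        """Join the non-empty elements into one labeled prompt string."""
        sections = [
            ("Instructions", self.instructions),
            ("Context", self.context),
            ("Input data", self.input_data),
            ("Output format", self.output_indicators),
        ]
        return "\n\n".join(f"{label}: {text}" for label, text in sections if text)

prompt = Prompt(
    instructions="Write 10 subject lines for the promo email below.",
    context="Audience: existing customers. Tone: friendly and upbeat. "
            "Key takeaway: the spring sale ends Friday.",  # placeholder values
    input_data="<paste the promo email copy here>",
    output_indicators="A numbered list; each subject line at most 150 characters.",
)
print(prompt.render())
```

Keeping the elements separate makes it easy to swap in new input data while reusing the same instructions, context, and output indicators.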

What makes a strong prompt for AI content generators?

Let’s walk through some actual strategies for improving your prompt engineering.

Trial and error

This is really the heart of prompt engineering. Throw in a prompt, see what the result is, change the prompt, and evaluate the new result. Rinse and repeat. Keep it simple, try it out, and see where it takes you.

Take the time to play with different AI tools too, and compare the results of different models. Google Bard could give a completely different answer than ChatGPT does for the same prompt. In fact, sometimes, the same LLM can give different responses to the same prompt. Experiment with what works for each, and remember to save your old prompts in a prompt library as you go. Even if it didn’t give the desired result, you could use it to refine and optimize other prompts going forward.

Problem formulation

This is a fancy way of saying: describe the problem you’re looking to solve in its simplest form. AI content generators like ChatGPT are great at checking the boxes of a meta description, for instance, but they don’t necessarily understand why you want to create one. Break down the problem into its simplest form, and then start explaining how AI can help.

Provide examples

AI content generators like ChatGPT, which are powered by LLMs, provide better results when the request is more specific. Again, you get out what you put in when it comes to AI. With that in mind, it can be helpful in many cases to provide an example of the result you’re looking for to give a tool a nudge in the right direction. For example, you could provide the email copy from a previous campaign to give a content generator an idea of what the structure, length, tone, or even the content of a new email should be. In fact, some tools, like Claude.ai, even allow you to attach PDFs and other documents to your prompt to provide context to the model.

Prompt priming

Prompt priming essentially refers to laying the foundation for an AI tool’s understanding of a topic. For instance, before asking ChatGPT to write copy for a P2P email, first, try asking it to tell you what makes a P2P email unique. This helps establish elements of what you are looking to achieve and how the AI can help. Try this out for yourself as an experiment: ask ChatGPT to create something, then do the same thing but prime your prompt first and compare the differences.

Use prompting tools

Sometimes, you may feel overwhelmed by the possibilities of generative AI and not know how to start your question. That’s when it helps to see what has worked for other people. Tools like AIPRM crowdsource and compile effective prompts from real AI power users to give content creators a jump start on their prompts and give a boost to their prompt library.

Effective prompt engineering techniques

Now that you understand the elements that make up a prompt, let’s dive into different ways people have been thinking about prompt engineering and what techniques may work best depending on your output needs.

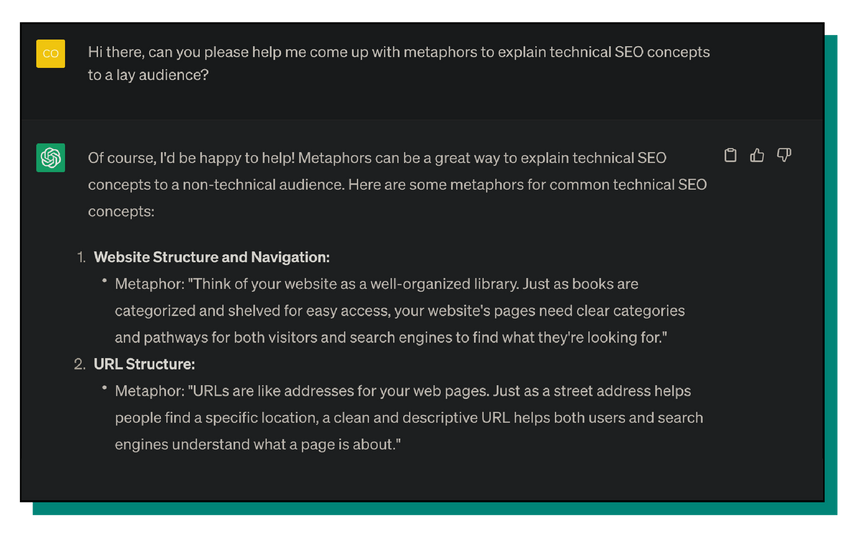

Zero-shot prompt

A zero-shot prompt refers to a prompt with no context or input data, just a request for information or a direct question. Zero-shot prompting only requires the LLM to draw from its own backlog of information to complete the request. Think of zero-shot prompting as almost the equivalent of asking Google something. For example, asking ChatGPT for some metaphors to help explain SEO principles to a lay audience would be zero-shot prompting.

One-shot prompt

Whereas zero-shot prompting provides no context or examples to the LLM, one-shot prompting provides (you guessed it) one piece of context or example. This is especially effective when you need the model to understand a specific pattern or format to complete the request, like a math problem or equation. For example, you could show an AI one article summarized in the bullet-point format you like, then give it a piece of content on a Google update and ask it to summarize that content in the same format.

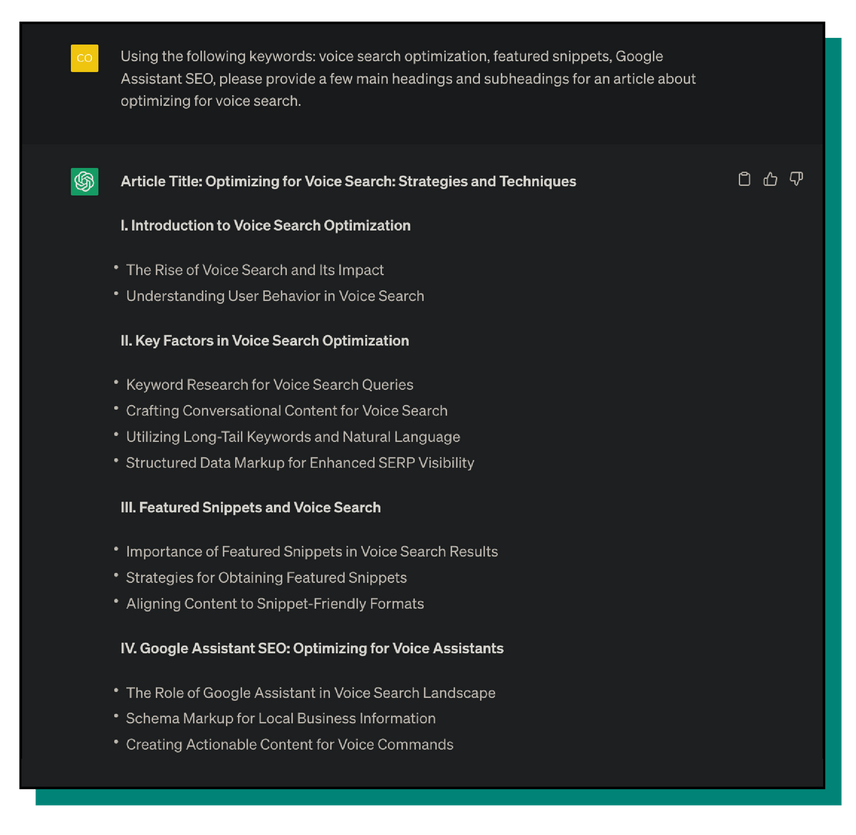

Few-shot prompt

A direct extension of one-shot prompting, few-shot prompts give the model more context and examples to complete a task. This is particularly effective if the results from one-shot prompting aren’t cutting it. An example of few-shot prompting is providing examples of keywords and queries you’d like a piece of content about voice search to rank for, then asking the AI to suggest a few main headings and subheadings.

You get out of LLMs what you put into them. In general, more context helps keep the work on topic.
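The main difference between zero-, one-, and few-shot prompts is how many worked examples you include. The Python sketch below makes that explicit with a hypothetical helper that formats (input, output) example pairs ahead of the new request. The `Input:`/`Output:` labels and the voice search examples are placeholders of our own, not a format any model requires.

```python
def build_shot_prompt(task, examples, query):
    """Hypothetical helper: 0 examples -> zero-shot, 1 -> one-shot, 2+ -> few-shot.

    Each example is an (input, output) pair shown to the model before the query.
    """
    parts = [task]
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}\nOutput: {example_output}")
    # The final input ends with an empty "Output:" for the model to complete.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)

task = "Suggest an H2 heading for a blog post section about the given keyword."
examples = [
    ("voice search for local businesses", "How Local Businesses Can Win at Voice Search"),
    ("voice search ranking factors", "What Affects Your Voice Search Rankings?"),
]

zero_shot = build_shot_prompt(task, [], "voice search vs. text search")
one_shot = build_shot_prompt(task, examples[:1], "voice search vs. text search")
few_shot = build_shot_prompt(task, examples, "voice search vs. text search")
print(few_shot)
```

Because the examples share one consistent pattern, the model has a clear template to imitate for the final, unanswered input.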

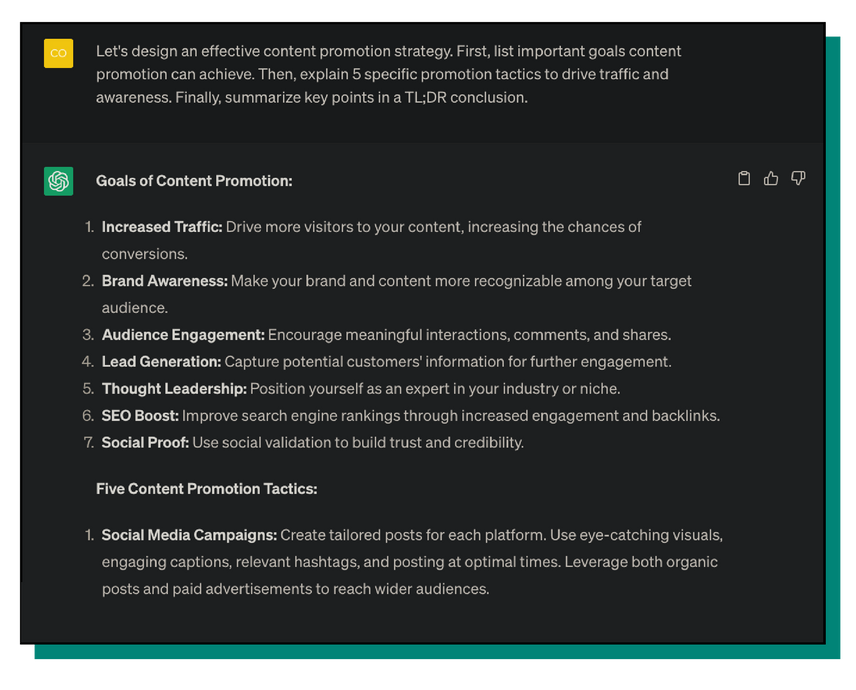

Chain-of-thought prompt

A chain-of-thought prompt technique, as we use the term here, refers to a series of connected prompts, all working together to help the model contextualize a desired task. (In AI research, “chain-of-thought prompting” more often means asking the model to reason step by step before answering; the connected-prompt approach described here is also called prompt chaining.) ChatGPT, for example, remembers the context of previous questions and answers within a single chat, and building on that context tends to make for better results. This type of AI prompting is very effective for complex tasks. This could look like talking ChatGPT through what an effective content promotion strategy looks like, one question at a time.
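Chat APIs commonly handle this kind of multi-turn conversation by having the application keep the history and send all earlier turns along with each new prompt. The Python sketch below illustrates that under stated assumptions: the role/content message format mirrors what many chat APIs use, but `chat_turn`, `ask_model`, and `fake_model` are hypothetical stand-ins, and no real AI service is called.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]

def chat_turn(history: List[Message], user_prompt: str,
              ask_model: Callable[[List[Message]], str]) -> str:
    """Hypothetical helper: send a new prompt with every earlier turn, then record the reply."""
    history.append({"role": "user", "content": user_prompt})
    reply = ask_model(history)  # the model sees the whole conversation so far
    history.append({"role": "assistant", "content": reply})
    return reply

def fake_model(messages: List[Message]) -> str:
    """Stand-in for a real model call: reports how much context it received."""
    return f"(reply based on {len(messages)} message(s) of context)"

history: List[Message] = []
steps = [
    "What are the main goals of a content promotion strategy?",
    "Which channels work best for promoting long-form blog posts?",
    "Using the goals and channels above, draft a one-month promotion plan.",
]
for step in steps:
    print(chat_turn(history, step, fake_model))
```

Each later prompt can say “the goals and channels above” only because the earlier turns travel with it; start a fresh history and that context is gone.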

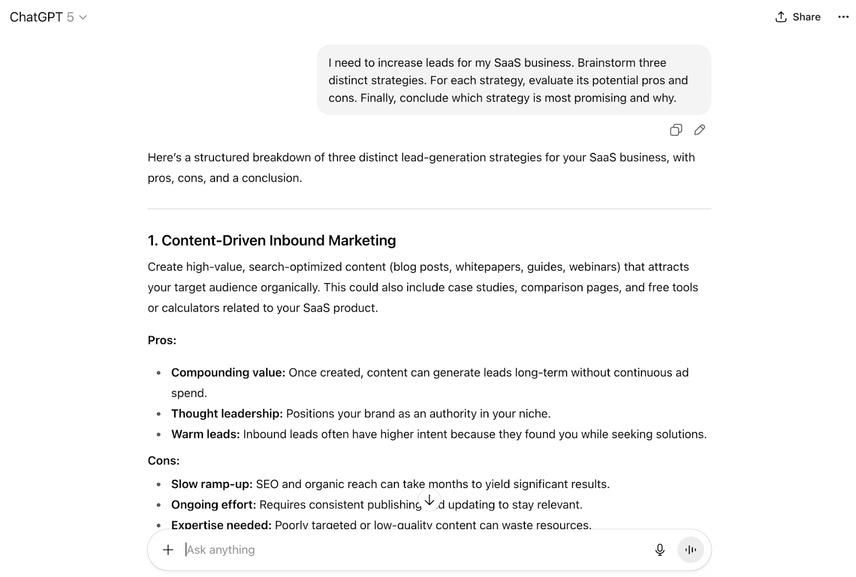

AMA prompt

Sort of an outgrowth of chain-of-thought prompting, AMA (ask-me-anything) prompting is an approach to prompt engineering that tries to encourage open-ended responses from the model. Simply put, AMA prompting asks an LLM open-ended questions and keeps the conversation going, with the user refining the prompts along the way. Rather than looking for a single perfect prompt, AMA prompting treats the exchange as a conversation between the user and the AI, relying on multiple imperfect prompts to drive the desired result.

Here’s an example of what an AMA prompt might look like:

- Step 1: I need to write a blog post about the benefits of using a CRM for a small business. First, generate a list of 5 critical questions a small business owner might have about this topic.

- Step 2 (after the AI generates questions): Excellent. Now, answer each of those five questions in detail.
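Step 2 depends on the list the AI produced in step 1. If you script this kind of exchange, a small helper can pull the numbered questions out of the first reply so you can ask about each one separately, a variation on the single step-2 prompt above. The Python sketch below is hypothetical: the parsing rule assumes the model answers with a numbered list (worth checking rather than taking for granted), and `step_1_reply` is made-up sample text.

```python
import re
from typing import List

def extract_numbered_items(reply: str) -> List[str]:
    """Hypothetical helper: pull items like '1. ...' or '2) ...' out of a numbered-list reply."""
    items = []
    for line in reply.splitlines():
        match = re.match(r"\s*\d+[.)]\s+(.*\S)", line)
        if match:
            items.append(match.group(1))
    return items

def follow_up_prompts(questions: List[str], topic: str) -> List[str]:
    """Turn each question from step 1 into its own step-2 prompt."""
    return [f"In the context of {topic}, answer this question in detail: {q}" for q in questions]

# Made-up example of what the AI might return for step 1.
step_1_reply = """Here are five questions:
1. How much does a CRM cost?
2. How long does setup take?
3) Will my team actually use it?
4. Can it integrate with my email tool?
5. How do I measure ROI?"""

questions = extract_numbered_items(step_1_reply)
prompts = follow_up_prompts(questions, "using a CRM for a small business")
```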

Advanced prompting strategies

Sometimes the strategies we just explained don’t provide the consistency and accuracy you need from AI outputs. If you want to go deeper, you can get a bit more into the weeds to tackle more complex problems and improve the reliability and consistency of your results.

Self-consistency

This technique helps improve accuracy on tasks, such as math word problems or multi-step reasoning questions, where any single response might be unreliable. It involves generating multiple responses to the same prompt, either by using slightly different phrasing or by adjusting the model’s settings (for example, sampling with a higher temperature so the answers vary). From there, you would select the most consistent or frequently occurring answer.

By identifying the common thread across several outputs, you can arrive at a more reliable and well-reasoned result.
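The voting step is simple enough to sketch. In the Python below, `ask_model` is assumed to be a function you write to call your AI tool of choice with some randomness enabled; here it is replaced by a hypothetical fake that returns canned answers. Answers are lightly normalized (trimmed and lowercased) before counting, which only works for short answers. Comparing long free-form responses would need a smarter notion of what counts as the same answer.

```python
from collections import Counter
from typing import Callable, List, Tuple

def self_consistent_answer(prompt: str, ask_model: Callable[[str], str],
                           samples: int = 5) -> Tuple[str, float]:
    """Ask the same prompt several times; return the most common answer
    and the share of samples that agreed with it."""
    answers: List[str] = [ask_model(prompt).strip().lower() for _ in range(samples)]
    answer, votes = Counter(answers).most_common(1)[0]
    return answer, votes / samples

# Hypothetical stand-in for a model sampled with randomness: canned answers in turn.
canned = iter(["$8", "$8", "$6", " $8", "$24"])
def fake_model(prompt: str) -> str:
    return next(canned)

answer, agreement = self_consistent_answer(
    "A store sells pens at 3 for $2. How much do 12 pens cost?", fake_model)
print(answer, agreement)  # prints: $8 0.6
```

The agreement score is a useful by-product: if the votes are split evenly, that is a signal to rephrase the prompt or double-check the answer yourself.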

Tree-of-Thoughts (ToT) prompting

Tree-of-thoughts is an advanced technique that allows an AI to more closely mimic human problem-solving. Instead of following a single line of reasoning, the ToT framework prompts the model to explore multiple reasoning paths simultaneously, like branches of a tree. The model then evaluates the viability of each path, allowing it to self-correct and choose the most promising route to find the best possible solution.
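In real ToT setups, the model itself proposes the next reasoning steps and judges how promising each partial path is. The Python sketch below keeps only the search skeleton (branch, evaluate, prune, repeat) and swaps the model for two hypothetical stand-in functions on a toy puzzle: get from 1 to 20 using only "+3" and "*2". It illustrates the idea under those assumptions and is not the original ToT framework’s code.

```python
from typing import Callable, List, Tuple

State = Tuple[int, List[str]]  # (current value, steps taken so far)

def tree_of_thoughts(root: State,
                     propose: Callable[[State], List[State]],
                     evaluate: Callable[[State], float],
                     depth: int, beam_width: int) -> State:
    """Breadth-first search that keeps only the `beam_width` most promising paths."""
    frontier = [root]
    for _ in range(depth):
        # Branch: expand every surviving path into its possible next steps.
        candidates = [child for state in frontier for child in propose(state)]
        if not candidates:
            break
        # Evaluate and prune: keep the best branches, drop the rest.
        candidates.sort(key=evaluate, reverse=True)
        frontier = candidates[:beam_width]
    return max(frontier, key=evaluate)

TARGET = 20

def propose(state: State) -> List[State]:
    """Stand-in for asking the model for candidate next steps."""
    value, steps = state
    return [(value + 3, steps + ["+3"]), (value * 2, steps + ["*2"])]

def evaluate(state: State) -> float:
    """Stand-in for asking the model to rate a partial path: closer to 20 is better."""
    value, _ = state
    return -abs(TARGET - value)

best = tree_of_thoughts((1, []), propose, evaluate, depth=4, beam_width=2)
print(best)  # prints: (19, ['+3', '*2', '*2', '+3'])
```

With a beam of 2, pruning discards the branch that leads to exactly 20, so the search settles on 19. Raising `beam_width` to 4 keeps enough branches alive to reach 20, at the cost of evaluating more paths, which is the same trade-off ToT makes with real model calls.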

Recursive self-improvement

This involves creating a cyclical process for refining AI-generated content. You start by having the AI generate an output. Then, you prompt it to critique its own work based on a specific set of criteria, such as clarity, accuracy, or tone. Finally, you ask the AI to improve upon its initial response based on its own critique. You can keep repeating this feedback loop to enhance the quality of the AI’s final output.

Here’s an example of what a dialogue with the AI might look like in this case:

Example Dialogue:

You: Write a welcome email for new subscribers to my newsletter, "The Weekly Coder."

AI: [Generates a generic welcome email.]

You: Now, critique the email you just wrote. Is the tone engaging for a technical audience? Is the call-to-action clear?

AI: Critique: The tone is a bit too formal. The call-to-action could be more prominent and specific.

You: Great. Now, rewrite the email, addressing those two points of critique.
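If you run this loop often, it can be scripted. The Python sketch below assumes a hypothetical `ask_model` function that sends one prompt to your AI tool and returns its reply; here a fake model simply numbers its responses so the flow is easy to follow. The prompt wording reuses the welcome-email dialogue and is illustrative only.

```python
from typing import Callable, List

def refine(task: str, criteria: str, ask_model: Callable[[str], str],
           rounds: int = 2) -> List[str]:
    """Generate a draft, then alternate critique and rewrite for `rounds` cycles.
    Returns every draft so you can compare versions."""
    drafts = [ask_model(task)]
    for _ in range(rounds):
        critique = ask_model(
            f"Critique the following draft against these criteria: {criteria}\n\n"
            f"Draft:\n{drafts[-1]}"
        )
        revised = ask_model(
            "Rewrite the draft to address this critique.\n\n"
            f"Critique:\n{critique}\n\nDraft:\n{drafts[-1]}"
        )
        drafts.append(revised)
    return drafts

# Hypothetical stand-in model: records each prompt and numbers its replies.
calls: List[str] = []
def fake_model(prompt: str) -> str:
    calls.append(prompt)
    return f"response {len(calls)}"

drafts = refine(
    'Write a welcome email for new subscribers to my newsletter, "The Weekly Coder."',
    "Is the tone engaging for a technical audience? Is the call-to-action clear?",
    fake_model,
)
```

Keeping every draft, rather than only the last one, makes it easy to check whether later rounds actually improved the output or just changed it.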

Common AI prompting pitfalls

Crafting the perfect prompt is as much about avoiding common mistakes as it is about applying advanced techniques. Here are some common issues and pitfalls you can sidestep to get better output from your prompts.

Overstuffing prompts

It’s best not to try to get an AI to do too many things at once. While it’s important to provide enough detail to get the job done, asking the model to perform several distinct tasks in one prompt can lead to confused or incomplete results. The output is much more likely to be high-quality and relevant if your prompt is focused on a single, specific goal. If you have a multi-step task, you may want to consider breaking it down into a series of individual prompts.

Being too vague

On the other side of the coin, not providing enough information will almost always result in a low-quality response. Be sure that you’re being specific and including all of the relevant details, context, examples, and nuances the AI needs to know in order to complete the task successfully. To ensure the model has everything it needs, you can even encourage it to ask clarifying questions before proceeding with the task.

Assuming AI has context and background knowledge

A frequent mistake is assuming the AI has the same background knowledge or context for a project that you do. The model has no awareness of your specific project goals, your company's brand voice, or the conversation you had with a colleague just before writing the prompt. And while models do remember the context and resources you’ve provided earlier in the same chat, they usually won’t carry that context over to a new chat.

Forgetting to provide this essential background information forces the AI to make guesses, which often results in generic or misaligned content. Always proactively provide any relevant context, define specialized terms, and tell the model what persona or role it should adopt to ensure the output is tailored to your situation.

How to structure your prompts for success

The way you organize your prompt can dramatically impact the quality of the output. Here are a few tips to help you structure your prompts for better results.

Use delimiters

Clearly separate distinct parts of your prompt, like instructions, context, and input data, using delimiters. These can be any clear separator, such as triple hash marks (###), triple quotation marks ("""..."""), triple brackets ([[[...]]]), or XML tags (<example>...</example>). This helps the model accurately tell the different components of your prompt apart, reducing confusion and leading to a more precise response.
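Here is one way to apply XML-style tags consistently. In this hypothetical Python helper, the tag names are arbitrary labels of our choosing, not something a particular model requires. Each section gets a clear start and end, and any stray copy of a closing tag inside pasted content is removed so it can’t end the section early.

```python
def tag(name: str, text: str) -> str:
    """Wrap `text` in <name>...</name>, dropping any copy of the closing tag inside it."""
    closing = f"</{name}>"
    return f"<{name}>\n{text.replace(closing, '')}\n{closing}"

def delimited_prompt(instructions: str, context: str, input_data: str) -> str:
    """Hypothetical helper: give each prompt element its own tagged section."""
    return "\n\n".join([
        tag("instructions", instructions),
        tag("context", context),
        tag("input_data", input_data),
    ])

prompt = delimited_prompt(
    "Summarize the article in the input_data section in three bullet points.",
    "The summary is for a newsletter aimed at SEO professionals.",
    "<paste the article text here>",
)
print(prompt)
```

Referring to a section by its tag name in the instructions ("the article in the input_data section") helps tie the parts of the prompt together.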

Be specific and detailed

Vague instructions lead to vague results. Instead of saying "write a short description," be specific about the length, format, style, and tone you’re going for.

For example: "Write a 3-sentence product description in a witty and informal tone, highlighting the product's durability and ease of use" is a better prompt than “Write a short, fun, punchy product description.” The AI has more guidelines and context to work from with the first option, which is much more likely to lead to stronger results.

The more specific your instructions, the closer the output will be to your vision.

Provide examples for output formatting

If you need the output in a specific format (like JSON, a markdown table, a content template, or a numbered list), show the model exactly what you want by including an example in your prompt. This is one of the most effective ways to ensure the structure of the AI's response meets your requirements, saving you significant time on reformatting. For example, say that you’re creating a piece of content for your travel & hospitality website about the top 10 winter getaway destinations, and you want to create a few emails to promote the article. You could take your promo email template and feed that directly into the chatbot with your prompt to ensure the AI matches that structure. You could even input previous email copy that performed well to help inform tone and brand voice.
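When you ask for a machine-readable format like JSON, you can also check the reply automatically instead of eyeballing it. The Python sketch below embeds a sample object in the prompt and then validates the model’s reply with the standard-library `json` module. The field names, sample values, and article title are hypothetical placeholders based on the travel example above.

```python
import json

# Example output shape shown to the model (hypothetical field names and values).
EXAMPLE = {
    "subject_line": "Escape the cold: 10 winter getaways",
    "preview_text": "Our top picks for sun, snow, and everything in between.",
}

def build_prompt(article_title: str, count: int) -> str:
    """Ask for `count` emails and show the exact JSON structure expected."""
    return (
        f"Write {count} promo emails for our article \"{article_title}\".\n"
        "Respond with only a JSON array. Each item must have exactly the same "
        "keys as this example:\n"
        f"{json.dumps(EXAMPLE, indent=2)}"
    )

def parse_reply(reply: str, count: int) -> list:
    """Check that the reply is a JSON array of `count` objects with the example's keys."""
    data = json.loads(reply)  # raises json.JSONDecodeError (a ValueError) on invalid JSON
    if not isinstance(data, list) or len(data) != count:
        raise ValueError(f"expected a list of {count} items")
    for item in data:
        if not isinstance(item, dict) or set(item) != set(EXAMPLE):
            raise ValueError(f"unexpected item shape: {item!r}")
    return data

prompt = build_prompt("Top 10 Winter Getaway Destinations", 3)
```

If `parse_reply` raises, you can send the error message back to the model and ask it to fix the format, rather than reformatting by hand.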

However, be aware that unless you have an enterprise license with these AI chatbots or have opted out in your account settings, any data or information you put into your prompts may be used to further train the AI model, depending on the provider’s terms. So be careful of what you include in your prompts.

How do I manage my engineered prompts?

To successfully incorporate AI into your digital strategy, you need to do more than create prompts; you also need to manage, document, and optimize them over time. What good, after all, are a bunch of engineered prompts if no one knows where to find them?

Create a prompt library

Whether you invest in a tool or keep a detailed spreadsheet, you should document the prompts you’ve created that you found valuable. This makes it easier for you, your team, and future hires to move quickly with AI generation rather than spending time experimenting to find the perfect prompt.

In your library, you should include any prompt that has driven a strong output. Be sure to document not just the prompt itself, but also its purpose, the AI model it was designed for, and an example of the output it produced. Consider categorizing prompts by task (e.g., "Meta description generation," "Blog post outlines," "Social media copy") to make them easier to find.

Your prompt library can be as simple as a shared spreadsheet or a more robust solution like a Notion or Airtable database. For larger teams, dedicated prompt management tools are also available from companies like Microsoft, OpenAI, and Google. The key is to choose a centralized, accessible location where your team can easily find and contribute to your prompt collection.
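Whatever tool you choose, each entry needs the same few fields. As a hypothetical sketch in Python, here is a minimal library record holding the fields suggested above (category, purpose, model, the prompt itself, and an example output), plus a lookup by category and a CSV export you could open as a shared spreadsheet. The class and function names are our own; dedicated prompt management tools have their own formats.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from typing import List

@dataclass
class PromptRecord:
    """Hypothetical prompt library entry."""
    category: str        # e.g. "Meta description generation"
    purpose: str
    model: str           # the AI tool or model the prompt was tested with
    prompt: str
    example_output: str

def find_by_category(library: List[PromptRecord], category: str) -> List[PromptRecord]:
    """Case-insensitive lookup by category."""
    return [r for r in library if r.category.lower() == category.lower()]

def to_csv(library: List[PromptRecord]) -> str:
    """Export the library as CSV text that any spreadsheet app can open."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(PromptRecord)])
    writer.writeheader()
    for record in library:
        writer.writerow(asdict(record))
    return buffer.getvalue()

library = [
    PromptRecord("Blog post outlines", "Outline a how-to post", "ChatGPT",
                 "Create an outline with H2 and H3 headings for a post about [topic]",
                 "<paste a sample output here>"),
    PromptRecord("Social media copy", "Announce a new article", "Claude",
                 "Write three LinkedIn posts promoting [topic]",
                 "<paste a sample output here>"),
]
```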

Hire a prompt engineer

Whether you need to hire a full-time prompt engineer depends on the size of your company, the bandwidth and budget of your team, and dozens of other factors. Before you make any decisions here, consider how AI folds into your strategy today and how you’d like to incorporate it tomorrow.

If AI is a significant initiative your team is pushing, it may be worth adding headcount to manage your prompt library. But remember, AI companies are updating their tools constantly, trying to bring a better product to market. As they do, the tools will get better at understanding what users are asking for, and the need for highly curated prompts may wane.

Optimize your prompts over time

Like anything else, you’re not finished engineering a prompt once you find the perfect combination of words on ChatGPT. For one thing, a prompt you use to great success on ChatGPT could bring a completely different result when you input it into another tool.

In addition, AI tools are constantly improving, and that improvement is happening fast. OpenAI is already on GPT-5, the fifth generation of the GPT models that power ChatGPT, and who knows when another update may come. As these tools improve, your tried and tested prompts may not give you the same result they previously did.

Consider an AI Writing Assistant

If managing your prompts over the long haul seems like too much effort to add to your existing workflows, or if you want a solution that handles this work for you, you could opt for an enterprise AI platform.

AI writing assistants like Conductor Creator often have prompt engineering built into their backend. This allows you to get high-quality, optimized content without needing to become a prompt expert yourself, as the tool is already tuned for the required task.

Prompt engineering in review

Prompt engineering is key to maximizing your use of generative AI. If you don’t have a firm handle on how to make effective prompts, you may be wasting time rather than saving it, and saving time is, after all, one of the main reasons to use AI. But when it comes to prompt engineering, the most important thing to keep in mind is that creating effective prompts is a continuous process, and one that will keep changing as AI continues to improve. Invest the time to experiment with generative AI and your prompts. You won’t know what works until you try.