What is Query Fan-Out in AI Search and Why Does it Matter?

Traditional SEO strategies are no longer enough to stay visible in an AI-driven search landscape. While ranking for a single keyword used to be the goal, AI engines now fan out user intent into dozens of specific sub-queries.

If your content doesn't address these hidden layers of intent, you risk being ignored by the very models your customers are using.

Search has evolved beyond simple keyword matching. Thanks to the rise of AI Overviews, answer engines, and zero-click search, users aren’t limited to using a few words to get information quickly; they can ask much more complex and nuanced questions.

To provide comprehensive answers, LLMs and AI search engines use a technique known as query fan-out, and it’s critical in determining whether your content gets cited in an AI answer or ignored entirely. If you’re struggling to understand why your high-ranking pages aren't appearing in AI snapshots, query fan-out is likely the missing piece of the puzzle.

Here is what you need to know about query fan-out, how it impacts your visibility, and how to optimize your enterprise AEO / GEO and SEO strategies for this new reality.

What is query fan-out?

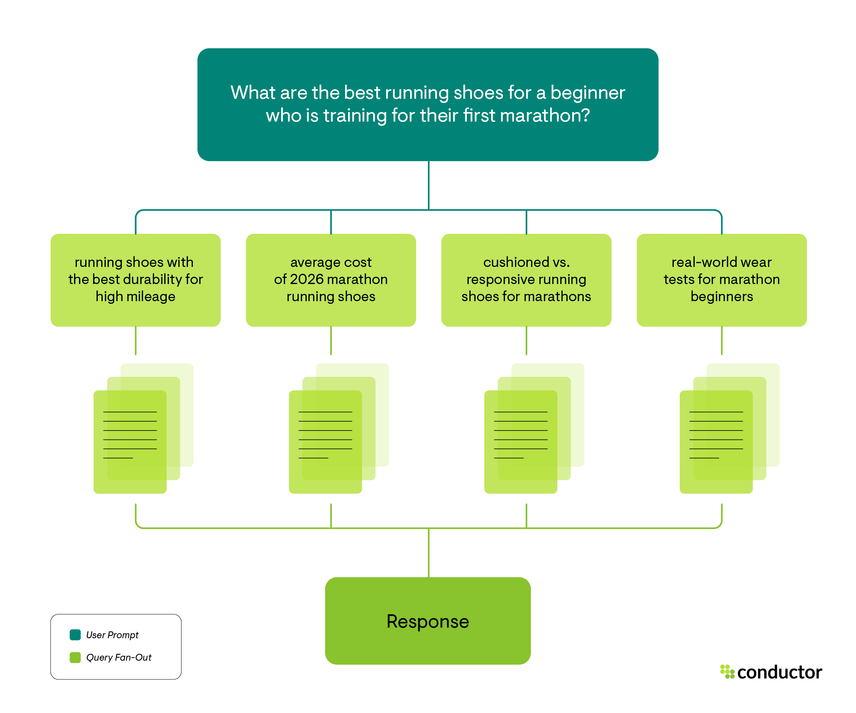

Query fan-out is a retrieval technique used by AI search systems to break a single, complex user prompt into multiple, distinct sub-queries.

Rather than treating a user’s input as one isolated search term, the AI expands, or “fans out,” the intent, generating a series of related questions to gather a complete set of information on the core topic. The LLM runs these searches all at once to gather different perspectives, then combines everything it found into a single, easy-to-read answer.

For example, if a user searches for the best running shoes for marathon training, a traditional search engine looks for pages that are optimized for that exact long-tail keyword.

On the other hand, an AI model using query fan-out might generate the following sub-queries before providing an output:

- Top-rated marathon running shoes in 2026

- Running shoes with the best durability for high mileage

- Cushioned vs. responsive running shoes for marathons

- Price comparison of top marathon shoes

The AI retrieves answers for all of these sub-questions and combines them.

For brands, that means your content might be cited not because you ranked for the main keyword, but because your content had the best answer for one of the specific sub-queries.

While the concept of query expansion isn't entirely new, the term gained significant traction with the introduction of Google’s AI Mode (and AI Overviews). Ultimately, it’s a fundamental shift in how search engines and LLMs process intent.

How does query fan-out work in LLMs?

To optimize for AI search, you have to understand the mechanics of how these models understand and retrieve data. The process of query fan-out generally follows this four-step workflow:

- Query decomposition: This is the fan-out phase. When a prompt enters the system, the LLM analyzes the semantic intent and identifies that the user’s request is too broad to be answered by a single source. The model then decomposes the main query into smaller, more digestible parts. In short, the AI acts as a researcher, brainstorming a list of questions it needs to answer to satisfy the user completely.

- Parallel information retrieval: The system searches for the list of sub-queries across its index or the live web. This is a key difference from traditional search: the AI is performing multiple searches on the user's behalf instantly. It might look for pricing data in one place, technical specifications in another, and user reviews in a third to craft the most complete and accurate answer possible.

- Source evaluation and extraction: The AI then analyzes the top results for each sub-query. It extracts specific facts, figures, steps, or commentary that directly address that specific query. This is where schema and content structure become critical; if the AI can’t easily understand your text, it can’t extract an answer from your content to cite in its response.

- Synthesis and generation: Finally, the model aggregates the information it pulled and weaves the cited facts into a single natural language response, whether that’s in a chatbot like ChatGPT or an AI Overview in traditional SERPs.
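To make the four steps above concrete, here is a minimal Python sketch of a fan-out pipeline. It is an illustration under stated assumptions, not how any particular AI search engine is implemented: the `TOY_INDEX` pages, the hard-coded facets in `decompose`, and the word-overlap scoring in `retrieve` are all hypothetical stand-ins for an LLM, a real search index, and a real ranking model.

```python
import re
from concurrent.futures import ThreadPoolExecutor

# Hypothetical in-memory "index" (URL -> page text). A real system would
# query a search index or the live web instead.
TOY_INDEX = {
    "example.com/durability": "Durability test: the shoes lasted 500 miles of marathon training.",
    "example.com/pricing": "Pricing: top marathon shoes cost between 140 and 160 dollars.",
    "example.com/cushioning": "Cushioning guide: cushioned marathon shoes absorb impact, responsive shoes return energy.",
}


def tokens(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def decompose(prompt: str) -> list[str]:
    """Step 1: fan the prompt out into sub-queries (facets hard-coded here)."""
    return [f"{prompt} {facet}" for facet in ("durability", "pricing", "cushioning")]


def retrieve(sub_query: str) -> tuple[str, str]:
    """Step 2: return the (url, text) pair sharing the most words with the sub-query."""
    terms = tokens(sub_query)
    return max(TOY_INDEX.items(), key=lambda item: len(terms & tokens(item[1])))


def extract(hit: tuple[str, str]) -> dict[str, str]:
    """Step 3: keep the passage and remember where it came from."""
    url, text = hit
    return {"source": url, "fact": text}


def synthesize(prompt: str, facts: list[dict[str, str]]) -> str:
    """Step 4: combine the extracted facts into one answer with citations."""
    lines = [f"Answer for: {prompt}"]
    lines += [f"- {f['fact']} [{f['source']}]" for f in facts]
    return "\n".join(lines)


def answer(prompt: str) -> str:
    sub_queries = decompose(prompt)
    # Run the sub-query searches in parallel; map() keeps the input order.
    with ThreadPoolExecutor() as pool:
        hits = list(pool.map(retrieve, sub_queries))
    return synthesize(prompt, [extract(hit) for hit in hits])


if __name__ == "__main__":
    print(answer("marathon running shoes"))
```

Notice that each of the three toy pages earns a citation for a different sub-query, even though none of them targets the original prompt as a whole.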

Why do LLMs use query fan-out?

LLMs use query fan-out to reduce two problems: ambiguity and hallucination.

LLMs are prediction engines, and they will always give you an answer to your question. Even if you ask a vague question, a model without query fan-out will answer anyway, significantly increasing the likelihood of hallucinations. By fanning out the query, the model grounds its response in specific facts it retrieved.

Query fan-out also allows for personalization and depth. A user asking how to fix a leaky faucet might need tools, steps, or a plumber. By expanding the query to cover tools needed for faucet repair, step-by-step faucet repair, and the cost of a plumber, the AI provides a helpful, all-encompassing guide that improves user satisfaction.

The impact of query fan-out on search

The impact is massive for digital marketing teams. In the past, winning in search meant ranking first for high-value keywords. With query fan-out, what it takes to win looks a lot different.

- Visibility is fragmented. You might not rank for the main query you’re targeting, but if you have the best content for a specific sub-query, for example, the durability angle of the running shoes, you can still earn a citation in the AI answer.

- Content authority is cumulative. AI models tend to cite sources that cover a topic holistically. If your domain covers the main topic and all the potential fan-out sub-topics, the AI is more likely to view your site as a comprehensive authority, potentially citing you multiple times within a single answer.

How is query fan-out different from traditional search?

While traditional search algorithms have long used synonyms and related searches to inform their responses, query fan-out differs in its goal and how it gets there.

- Traditional search is reactive, not predictive. It takes the keywords provided and looks for matches. Query fan-out is predictive. It anticipates what the user really needs to know, even if they didn't ask for it explicitly. It assumes the user wants the whole picture rather than just a list of links.

- Traditional results are linear lists, not comprehensive personalized answers. You rank for X, or you don't. Query fan-out creates a multi-dimensional result. The final answer is a patchwork quilt of information. A single AI response might cite a retailer for pricing, a blog for reviews, and a manufacturer for specs. In traditional search, these would be competing for one spot. In AI search, they can coexist in the same answer.

- Traditional search aims to route users to a website. The goal of query fan-out is to assemble a complete answer directly on the results page. While this can reduce click-through rates for simple queries, it increases the value of being the source for complex, high-intent questions where the user eventually clicks through for a deep dive.

Why is query fan-out important for AI search visibility?

If you ignore query fan-out, you’re basically optimizing for yesterday’s search landscape.

As Google and other platforms shift toward AI-first experiences, your brand’s visibility depends on how well your content covers a topic at large. If your content strategy focuses narrowly on specific keywords, you’re leaving gaps that competitors will fill.

When an AI fans out a query, it creates a list of requirements. If your content only satisfies one of those requirements, you might get a mention. But if your competitor created a comprehensive topic cluster that answers the main question and four or five of the likely sub-questions, the AI is far more likely to prioritize their content and trust them as a go-to source of truth.

Query fan-out also helps explain why you might lose visibility even if your technical SEO is perfect. If your content is thin, skips the nuances of a topic, or fails to address the questions the AI predicts the user has, the model will look somewhere else to fill those knowledge gaps.

How can I measure my query fan-out coverage?

Measuring performance in this new environment is one of the top pain points for enterprise brands. Traditional rank tracking shows where you sit on a list of blue links, but it doesn't tell you if you were cited in the AI answer for a specific sub-query.

You can’t manually guess every sub-query an AI might generate and start tracking them. The variations are infinite and personalized.

To effectively measure your coverage, you need to move beyond keyword rankings and look at share of voice in AI Overviews and answer engines. Here’s how you can get started measuring your query fan-out coverage:

- Identify content gaps: You need to identify where your brand is being mentioned and cited and, more importantly, where it’s not. If you rank organically for a term but fail to appear in an AI response, it’s a strong indicator that your content is missing the comprehensive topical coverage the AI is looking for.

- Leverage an enterprise AEO/GEO platform: This is where Conductor Intelligence becomes essential. You need a platform that provides unified insights across traditional and AI search. With Conductor, you can track visibility in LLMs and AI Overviews, helping you understand which topics you’re winning and where competitors are stealing market share with better topical coverage.

By analyzing the keywords and queries where you appear in AI answers, you can reverse-engineer the fan-out logic and adjust your content strategy accordingly.
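As a rough illustration of what share of voice can look like in practice, the sketch below computes it from a small set of observations. Everything here is an assumption for the example: the prompts, the domains, and the definition itself (the fraction of tracked prompts whose AI answer cites a domain at least once). Your platform may define and collect the metric differently.

```python
from collections import Counter

# Hypothetical observations: for each tracked prompt, the domains that
# were cited in the AI answer.
observations = {
    "best marathon running shoes": ["brand-a.example", "reviews.example"],
    "marathon shoe durability": ["reviews.example"],
    "marathon shoe price comparison": ["brand-a.example", "retailer.example"],
    "cushioned vs responsive shoes": ["brand-b.example"],
}


def share_of_voice(observations: dict[str, list[str]]) -> dict[str, float]:
    """Fraction of tracked prompts whose answer cites each domain at least once."""
    counts = Counter()
    for cited in observations.values():
        counts.update(set(cited))  # count a domain once per prompt
    total = len(observations)
    return {domain: n / total for domain, n in counts.items()}


def uncited_prompts(observations: dict[str, list[str]], domain: str) -> list[str]:
    """Prompts where the domain was not cited: candidate content gaps."""
    return [prompt for prompt, cited in observations.items() if domain not in cited]


if __name__ == "__main__":
    print(share_of_voice(observations)["brand-a.example"])
    print(uncited_prompts(observations, "brand-a.example"))
```

The list of uncited prompts is the useful part: it points to the sub-topics where a competitor is being cited and you are not.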

How to optimize content for query fan-out

Optimizing for query fan-out means you’re no longer writing to target a single keyword. Instead, you’re writing to satisfy a web of related queries and intents. Here is how to execute this at an enterprise scale.

Leverage topic clusters

The most effective way to align with query fan-out is to build comprehensive topic clusters.

Topic clusters make it easy for search engines and LLM bots to connect pieces of your content. This helps build topical authority, as the bots see that you not only understand the topic on its own, but also how it impacts other semantically related topics.

Instead of a single 2,000-word blog post that touches lightly on ten different sub-topics, consider a hub-and-spoke (topic cluster) model. Create a pillar page that addresses the broad query, then link out to detailed supporting pages that answer the specific sub-queries.

For example, let’s say you’re a B2B software company selling a CRM. Here’s how you might approach building a topic cluster:

- Pillar page: Ultimate Guide to CRM Implementation.

- Cluster pages: CRM cost comparison, CRM security features, CRM for small business vs. enterprise, CRM data migration checklist.

This structure tells the AI that your brand has the answer to the main question and the follow-up questions it will generate.
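A hub-and-spoke structure only works if the internal links actually exist in both directions. The sketch below shows one way to check that. The URLs and the `links` map are hypothetical; in practice you would build the map from a crawl of your own site.

```python
# Hypothetical internal-link map: page -> set of pages it links to.
links = {
    "/crm-implementation-guide": {
        "/crm-cost-comparison",
        "/crm-security-features",
        "/crm-data-migration-checklist",
    },
    "/crm-cost-comparison": {"/crm-implementation-guide"},
    "/crm-security-features": {"/crm-implementation-guide"},
    "/crm-small-business-vs-enterprise": {"/crm-implementation-guide"},
    "/crm-data-migration-checklist": set(),
}


def cluster_link_gaps(pillar: str, cluster_pages: list[str], links: dict[str, set[str]]) -> dict[str, list[str]]:
    """Find cluster pages the pillar does not link to, and cluster pages
    that do not link back to the pillar."""
    pillar_links = links.get(pillar, set())
    return {
        "pillar_missing_link_to": sorted(p for p in cluster_pages if p not in pillar_links),
        "missing_link_back_to_pillar": sorted(
            p for p in cluster_pages if pillar not in links.get(p, set())
        ),
    }


if __name__ == "__main__":
    cluster = [
        "/crm-cost-comparison",
        "/crm-security-features",
        "/crm-small-business-vs-enterprise",
        "/crm-data-migration-checklist",
    ]
    print(cluster_link_gaps("/crm-implementation-guide", cluster, links))
```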

Create high-quality, helpful content

One thing that hasn’t changed from the days of SEO is the critical role that helpful content plays in your brand’s success.

AI models are trained to prioritize content that directly answers questions. Fluff, marketing jargon, and lengthy intros make it more difficult for LLM bots to understand your content, making it much less likely that they’ll mention or cite it.

To optimize for fan-out:

- Answer the question immediately: Don’t bog down your content with a long intro that doesn’t provide value to the reader. The longer it takes for the LLM bot to find the relevant content, the more likely it is to leverage another source.

- Optimize your content structure: Use clear H2s and H3s that mirror likely sub-queries, for example: How much do marathon running shoes cost? Or: Why does choosing the right CRM matter?

- Add context: Go beyond the surface-level answer by including supporting data, expert quotes, or related examples. Providing these extra layers of detail helps the AI categorize your content as a source worthy of being cited and mentioned.

Conductor Creator helps you analyze top-ranking content and AI answers to identify the entities and questions your content is missing, ensuring you hit the quality marks required for citations.

Leverage schema and structured data

AI bots and AI agents are machines that rely on structure to understand the meaning of a piece of content. Schema markup is your way of speaking the AI's language.

By marking up your content with different types of schema, you explicitly tell the search engine what your content is about. This reduces the amount of time the AI has to spend processing the content.

If the AI expands a query to find pricing for running shoes, and your page has clear Product Schema, you are significantly more likely to be selected as the source for that data point.
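For reference, here is a minimal sketch of what Product markup with pricing might look like, generated in Python as a JSON-LD script tag. The product name, price, and URL are placeholders, and the helper `product_jsonld` is a hypothetical name. The property names (`Product`, `Offer`, `price`, `priceCurrency`, `availability`) come from the schema.org vocabulary, but check the current search engine guidelines for which properties are required for your pages.

```python
import json


def product_jsonld(name: str, price: float, currency: str, url: str) -> dict:
    """Build a schema.org Product object with a single Offer."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "url": url,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",  # price as a plain decimal string
            "priceCurrency": currency,  # ISO 4217 code, e.g. "USD"
            "availability": "https://schema.org/InStock",
        },
    }


# Placeholder product data for illustration only.
markup = product_jsonld(
    name="Example Marathon Shoe",
    price=159.99,
    currency="USD",
    url="https://www.example.com/shoes/example-marathon-shoe",
)

# JSON-LD is embedded in the page inside a script tag of this type.
script_tag = (
    '<script type="application/ld+json">\n'
    + json.dumps(markup, indent=2)
    + "\n</script>"
)

if __name__ == "__main__":
    print(script_tag)
```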

In complex enterprise environments, managing schema at scale is difficult. Conductor Monitoring alerts you to broken schema or technical issues that might be blocking bots from extracting this critical data, ensuring your technical foundation supports your content strategy and helps you achieve increased search visibility.

Quick experiment: Test with public LLMs and answer engines

If you want to understand what sub-queries matter for a priority topic, go directly to the source.

Open a tool like ChatGPT, Perplexity, or Gemini. Enter your target keyword, like enterprise cloud security, and analyze the response.

- What subheadings did it create?

- What follow-up questions does it suggest?

- What sources did it cite, and why?

If the AI breaks the topic down into compliance, data encryption, and remote access, check your content. Do you have dedicated sections or pages for these three aspects? If not, you have a fan-out gap.
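Once you have written down the sub-topics from the AI response, the gap check itself is a simple comparison. The sketch below assumes you maintain your own list of topics your site already covers; the function name and the sample topics are hypothetical.

```python
def fan_out_gaps(ai_subtopics: list[str], covered_topics: list[str]) -> list[str]:
    """Return the AI's sub-topics that have no matching page or section,
    comparing names case-insensitively."""
    covered = {topic.strip().lower() for topic in covered_topics}
    return [topic for topic in ai_subtopics if topic.strip().lower() not in covered]


if __name__ == "__main__":
    # Sub-topics noted from the AI response for "enterprise cloud security".
    ai_subtopics = ["Compliance", "Data encryption", "Remote access"]
    # Topics your site already has dedicated sections or pages for.
    covered_topics = ["data encryption", "identity management"]
    print(fan_out_gaps(ai_subtopics, covered_topics))
```

Exact name matching is a deliberate simplification. Real sub-topics rarely match your page titles word for word, so treat the output as a starting list to review by hand.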

Long-term win: Leverage an AEO/GEO visibility platform

While manual testing is great for one-off spot-checking, it’s not a sustainable measurement solution. You can’t manually prompt LLMs for thousands of keywords every week.

To properly ensure query fan-out coverage and AI search visibility, you need an enterprise AEO/GEO platform, like Conductor, to measure performance and seamlessly optimize your content strategy at scale.

Conductor mini case study

Navy Federal Credit Union’s approach to its Auto Loans page perfectly illustrates how to optimize for the way modern AI models handle fanned-out queries.

Originally, the page followed a traditional product-first structure, focusing on the features the credit union wanted to highlight. However, conversational query analysis in Conductor revealed that users (and the AI agents acting on their behalf) were actually fanning out into highly specific, intent-driven questions:

- Can I refinance my car loan with bad credit?

- Does Navy Federal require a down payment?

- How long does preapproval take?

By restructuring the page around these natural language patterns and using Conductor’s Writing Assistant to refine headings, Navy Federal ensured that when an AI engine "fans out" a broad auto loan search into specific sub-intents, their content is the most relevant answer available.

Plus, the addition of schema markup made it even easier for AI engines to parse, cite, and serve Navy Federal’s data as the definitive response.

According to Conductor’s analytics, this shift in content architecture led to a significant, measurable impact:

- 18% increase in page sessions and higher engagement with high-intent tools like rate calculators.

- Dominant AI visibility: The page now frequently appears as a cited source in results from ChatGPT, Copilot, and Perplexity.

- Increased referral traffic: A noticeable uptick in traffic specifically driven by AI-powered search platforms.

By aligning its content structure with the logic of query fan-out, Navy Federal became a trusted authority for the next generation of search.

Query fan-out in review

Query fan-out represents the next stage of search. It moves away from basic keyword targeting and into the fluidity and personalization of contextual search. For enterprise digital teams, this is an opportunity to differentiate.

By understanding how AI breaks down queries and optimizing your content to answer the resulting sub-questions, you can secure your brand in the next generation of search results. This requires a unified approach that combines technical health, structured data, and deep, clustered content.